How AI Enhances A/B Testing for Dynamic Content

AI has transformed A/B testing by making it faster, smarter, and more efficient. Traditional methods often take weeks to deliver results, struggle with complex variables, and fail to keep up with shifting user preferences. AI-powered tools solve these issues by automating hypothesis generation, reallocating traffic in real time, and continuously refining content based on live data.

Key benefits include:

- Faster results: Up to 80% quicker than manual testing.

- Improved conversion rates: Companies report boosts of 15–25%.

- Dynamic traffic allocation: AI directs users to better-performing content during tests.

- Multi-variable testing: Handles multiple elements like headlines, CTAs, and layouts simultaneously.

AI tools such as VWO, Kameleoon, and Inbox Agents simplify testing by analyzing user behavior, predicting high-performing elements, and customizing content in real time. However, challenges like data privacy, algorithmic bias, and resource demands require careful planning and human oversight to maximize effectiveness.

AI-powered A/B testing is reshaping content optimization, helping businesses achieve faster, data-driven insights while tailoring experiences to user needs.


Key AI Features in Dynamic Content A/B Testing

AI has changed A/B testing with features that make content optimization quicker, smarter, and more efficient. Let's take a closer look at three of them (automated hypothesis generation, real-time traffic allocation, and multi-armed bandit algorithms) and how they are reshaping the way businesses refine their content.

Automated Hypothesis Generation

One of AI’s standout abilities is predicting which content elements will perform best. Instead of relying on trial and error, AI dives into historical data - like conversion rates, click-through rates, engagement stats, and user segmentation - to pinpoint the variables most likely to succeed. For instance, OptiMonk’s Smart A/B Testing tool can recommend specific landing page elements to tweak, based on these insights. It might reveal that mobile users prefer concise headlines, while desktop users engage more with detailed product descriptions. What used to take weeks of analysis now happens in minutes.

AI also prioritizes the elements that have historically delivered the biggest wins; for example, it might suggest focusing on call-to-action buttons rather than headline changes. By reducing guesswork, AI helps focus each test on the changes most likely to pay off. In one reported case, a company that combined headline and CTA adjustments saw a 15% boost in conversions.
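Production tools use learned models for this, and their internals are not described here. The sketch below shows only the simplest form of the idea, under stated assumptions: rank candidate page elements by their average lift in past experiments, optionally for a single device segment. The log format, element names, and lift values are hypothetical.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical log of past experiments: (element tested, device, relative lift).
# The values are illustrative only; they are not results from any real tool.
PAST_TESTS = [
    ("cta_button", "mobile", 0.12),
    ("cta_button", "desktop", 0.08),
    ("headline", "mobile", 0.05),
    ("headline", "desktop", 0.02),
    ("hero_image", "mobile", -0.01),
    ("hero_image", "desktop", 0.03),
]


def rank_elements(tests, device=None):
    """Rank page elements by average historical lift, optionally for one device."""
    lifts = defaultdict(list)
    for element, dev, lift in tests:
        if device is None or dev == device:
            lifts[element].append(lift)
    averages = {element: mean(values) for element, values in lifts.items()}
    # Highest average lift first: these are the first candidates to test next.
    return sorted(averages.items(), key=lambda item: item[1], reverse=True)


for element, avg_lift in rank_elements(PAST_TESTS, device="mobile"):
    print(f"{element}: {avg_lift:+.1%}")
```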

Real-Time Traffic Allocation

Traditional A/B testing splits traffic evenly between variations for the entire test period. AI, on the other hand, takes a smarter approach by monitoring performance in real time and reallocating traffic to the best-performing options. This dynamic adjustment means fewer wasted impressions and quicker results. Businesses running high-traffic or time-sensitive campaigns can start seeing benefits in just hours or days.

AI uses metrics like statistical confidence to guide these changes, ensuring that decisions are based on solid data. For even more precision, some systems incorporate multi-armed bandit algorithms to fine-tune traffic allocation.
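The text does not say how each tool turns statistical confidence into traffic shares. One common Bayesian approach, sketched here as an assumption rather than any vendor's method, models each variant's conversion rate as a Beta posterior, estimates by simulation how often each variant comes out on top, and allocates traffic in proportion to that probability while keeping a minimum share (`floor`) for every variant. The visitor counts and the 5% floor are hypothetical.

```python
import random


def probability_best(stats, draws=20_000, seed=0):
    """Estimate P(variant is best) from Beta(1 + conversions, 1 + misses) posteriors."""
    rng = random.Random(seed)
    wins = {name: 0 for name in stats}
    for _ in range(draws):
        # One plausible conversion rate per variant, sampled from its posterior.
        sampled = {
            name: rng.betavariate(1 + conv, 1 + visits - conv)
            for name, (conv, visits) in stats.items()
        }
        wins[max(sampled, key=sampled.get)] += 1
    return {name: count / draws for name, count in wins.items()}


def allocation(stats, floor=0.05):
    """Turn win probabilities into traffic shares that sum to 1, with a minimum per variant."""
    k = len(stats)
    if floor * k > 1:
        raise ValueError("floor is too large for this many variants")
    p_best = probability_best(stats)
    return {name: floor + (1 - floor * k) * p for name, p in p_best.items()}


# Hypothetical running totals: variant -> (conversions, visitors).
stats = {"A": (120, 2400), "B": (150, 2400), "C": (118, 2400)}
print(probability_best(stats))
print(allocation(stats))
```

Keeping a floor means a variant that looks weak early can still recover if its early numbers were just noise.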

Multi-Armed Bandit Algorithms for Optimization

Multi-armed bandit algorithms take A/B testing a step further. These machine learning techniques balance two goals: sending most traffic to the best-performing variations (exploitation) while still giving some traffic to less-proven options so their estimates keep improving (exploration). This approach not only speeds up testing but also reduces losses from underperforming content.
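A minimal illustration of the exploration/exploitation trade-off is the epsilon-greedy bandit: with probability `epsilon` it shows a random variant (exploration), otherwise it shows the variant with the best observed conversion rate (exploitation). The simulation below is a sketch with hypothetical conversion rates; commercial platforms typically use more sophisticated strategies.

```python
import random


def epsilon_greedy(true_rates, visitors=10_000, epsilon=0.1, seed=1):
    """Simulate an epsilon-greedy bandit; true_rates are hidden from the policy."""
    rng = random.Random(seed)
    shown = [0] * len(true_rates)
    converted = [0] * len(true_rates)
    for _ in range(visitors):
        untried = [i for i, n in enumerate(shown) if n == 0]
        if untried:
            arm = untried[0]  # show every variant at least once
        elif rng.random() < epsilon:
            arm = rng.randrange(len(true_rates))  # explore: random variant
        else:
            # Exploit: the variant with the best observed conversion rate so far.
            arm = max(range(len(true_rates)), key=lambda i: converted[i] / shown[i])
        shown[arm] += 1
        if rng.random() < true_rates[arm]:  # simulated visitor converts
            converted[arm] += 1
    return shown, converted


# Hypothetical true conversion rates for three variants.
shown, converted = epsilon_greedy([0.040, 0.050, 0.065])
print("visitors per variant:", shown)
print("conversions per variant:", converted)
```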

For dynamic content, where user preferences can shift quickly, bandits adapt as results come in, and variants that discount older data can track what is working now rather than relying on the full history. In one case, a B2B SaaS company cut its test duration by 40% with this method compared to manual testing. These algorithms can also handle multiple variables, like headlines, images, and CTAs, at the same time, for example by treating each combination as a separate option, which simplifies the overall optimization process.
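Two details in this paragraph are worth making concrete. Standard bandits weight all past data equally, so adapting to shifting preferences usually means discounting older evidence; and headlines and CTAs can be tested together by treating each combination as its own arm. The sketch below combines both using discounted Thompson sampling, a known variant of Thompson sampling chosen here for illustration, not the method of any specific platform. The content options, the `discount` value, and the simulated preference shift are all hypothetical.

```python
import itertools
import random

# Hypothetical content elements; each headline x CTA combination is one arm.
HEADLINES = ["short", "detailed"]
CTAS = ["Start free trial", "Book a demo"]
ARMS = list(itertools.product(HEADLINES, CTAS))


class DiscountedThompson:
    """Thompson sampling whose evidence fades, so recent visitors count more."""

    def __init__(self, arms, discount=0.999, seed=0):
        self.arms = list(arms)
        self.discount = discount  # 1.0 = never forget; lower = adapt faster
        self.successes = {arm: 0.0 for arm in self.arms}
        self.failures = {arm: 0.0 for arm in self.arms}
        self.rng = random.Random(seed)

    def choose(self):
        # Draw a plausible conversion rate per arm from Beta(1 + s, 1 + f).
        draws = {
            arm: self.rng.betavariate(1 + self.successes[arm], 1 + self.failures[arm])
            for arm in self.arms
        }
        return max(draws, key=draws.get)

    def update(self, arm, converted):
        # Fade all existing evidence slightly, then record the new outcome.
        for a in self.arms:
            self.successes[a] *= self.discount
            self.failures[a] *= self.discount
        if converted:
            self.successes[arm] += 1
        else:
            self.failures[arm] += 1


def true_rate(arm, t):
    # Hypothetical audience whose favorite combination changes halfway through.
    best = ARMS[1] if t < 5_000 else ARMS[2]
    return 0.08 if arm == best else 0.04


bandit = DiscountedThompson(ARMS)
sim = random.Random(42)
late_picks = {arm: 0 for arm in ARMS}
for t in range(10_000):
    arm = bandit.choose()
    bandit.update(arm, sim.random() < true_rate(arm, t))
    if t >= 9_000:
        late_picks[arm] += 1
print(late_picks)
```

A `discount` of 0.999 keeps an effective memory of roughly the last 1,000 observations (1 / (1 - 0.999)). Because the number of combinations grows multiplicatively with each element added, larger tests often model elements jointly instead of enumerating every combination.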

Together, these AI-driven features create a flexible and responsive testing environment that evolves alongside user behavior.

Benefits of AI-Powered A/B Testing Backed by Research

AI is reshaping the way A/B testing is conducted, delivering not just faster results but also more tailored user experiences. Research highlights major improvements in both efficiency and outcomes.

Faster and More Accurate Testing

AI can dramatically shorten the time required to complete tests. Published reports claim that AI-powered platforms can produce conclusive results up to 80% faster than traditional methods.
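For context on why fixed-split tests take weeks, the standard normal-approximation formula for a two-proportion test gives the sample each variant needs before the result can be called. The sketch below assumes a hypothetical page with a 3% baseline conversion rate, a target of detecting a 10% relative lift (3.0% to 3.3%), 5% significance, 80% power, and 5,000 daily visitors split evenly between two variants. Under those assumptions it needs roughly 53,000 visitors per variant, or about 22 days.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variant(baseline, relative_lift, alpha=0.05, power=0.8):
    """Visitors per variant for a two-sided two-proportion z-test (normal approximation)."""
    p1 = baseline
    p2 = baseline * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)  # about 0.84 for 80% power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def days_to_finish(baseline, relative_lift, daily_visitors, variants=2):
    """Days until every variant reaches the required sample with an even split."""
    needed = sample_size_per_variant(baseline, relative_lift) * variants
    return ceil(needed / daily_visitors)


# Hypothetical page: 3% baseline, aiming to detect a 10% relative lift,
# with 5,000 visitors per day split evenly between two variants.
print(sample_size_per_variant(0.03, 0.10))
print(days_to_finish(0.03, 0.10, daily_visitors=5_000))
```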

Unlike traditional approaches that rely on basic post-test evaluations, AI looks deeper, identifying intricate patterns in user behavior that human analysts might miss. Machine learning models keep adapting as new data arrives, which enables more accurate predictions for individual user segments.

Take SentinelOne, a cybersecurity firm, as an example. They leveraged AI-driven A/B testing to refine their landing pages. The outcome? A 25% boost in conversions and a 30% reduction in design time. The AI system pinpointed the most effective combinations of creative elements - an effort that would have taken months for their team to accomplish manually.

Improved Engagement and Conversion Rates

Building on its speed and precision, AI also enhances user engagement by dynamically customizing content. Companies that transition from traditional testing to AI-driven methods often see substantial improvements in critical metrics.

Projections indicate that AI adoption in A/B testing will grow from 5% in 2021 to 30% by 2025. Additionally, the global market for A/B testing tools is expected to hit $1.08 billion by 2025, fueled by the increasing demand for AI-enabled solutions.

The real power of AI lies in its ability to personalize content in real time. Instead of showing the same variation to all users, AI systems adjust elements like headlines, call-to-action buttons, and images based on individual behaviors and preferences. This level of customization often leads to higher click-through rates, longer site visits, and better conversion rates.
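A simplified way to picture per-user customization is to pick the best variant separately for each audience segment, falling back to the overall winner when a segment has too little data to trust. This greedy, segment-level sketch is an assumption for illustration; real personalization engines often use contextual bandits or predictive models instead. The segment names, data, and `min_visitors` threshold are hypothetical.

```python
from collections import defaultdict


def best_variant_by_segment(events, min_visitors=500):
    """Choose a variant per segment, falling back to the overall winner when data is thin.

    events: iterable of (segment, variant, converted) tuples, one per visitor.
    """
    by_segment = defaultdict(lambda: defaultdict(lambda: [0, 0]))  # [conversions, visitors]
    overall = defaultdict(lambda: [0, 0])
    for segment, variant, converted in events:
        for counts in (by_segment[segment][variant], overall[variant]):
            counts[0] += int(converted)
            counts[1] += 1

    def rate(counts):
        return counts[0] / counts[1]

    overall_best = max(overall, key=lambda v: rate(overall[v]))
    choice = {}
    for segment, variants in by_segment.items():
        # Only personalize when every variant has enough traffic in this segment.
        if set(variants) == set(overall) and all(c[1] >= min_visitors for c in variants.values()):
            choice[segment] = max(variants, key=lambda v: rate(variants[v]))
        else:
            choice[segment] = overall_best
    return choice


# Hypothetical usage: one tuple per visitor. With this little data,
# every segment falls back to the overall winner.
events = [("mobile", "A", True), ("mobile", "B", False), ("desktop", "B", True)]
print(best_variant_by_segment(events))
```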

AI also enables continuous, real-time optimization that evolves alongside changing user behavior and market trends. The comparison below summarizes how this differs from traditional testing; after that, we examine specific tools, including how Inbox Agents incorporates AI-powered testing, that make these benefits accessible.

Traditional vs. AI-Powered A/B Testing: A Comparison Table

Here’s a breakdown of how traditional and AI-driven A/B testing stack up across key metrics:

| Feature | Traditional A/B Testing | AI-Powered A/B Testing |
|---|---|---|
| Test Setup | Manual, static variants | Automated, dynamic, multiple variants |
| Hypothesis Generation | Human-driven, intuition-based | AI-driven, data-informed, automated |
| Traffic Allocation | Fixed split (e.g., 50/50) | Dynamic, real-time optimization |
| Data Analysis | Basic, post-test only | Deep, real-time, predictive |
| Time to Results | Weeks to months | Days to weeks (up to 80% faster) |
| Conversion Rate Improvement | Incremental, slower gains | Faster, larger gains (e.g., +25% reported by SentinelOne) |
| Design Time | High, manual effort required | Lower through automation (e.g., 30% less at SentinelOne) |
| Scalability | Limited by manual effort | High, supports complex multi-variable tests |


AI Tools and Platforms for Dynamic A/B Testing

AI-driven A/B testing automates complex processes and delivers actionable insights much faster than manual methods. Below, we look at platforms that show how AI is changing A/B testing in practice.

Overview of AI-Powered A/B Testing Tools

Platforms like VWO and Kameleoon stand out with comprehensive testing capabilities, ranging from automated hypothesis generation to real-time traffic allocation. Powered by machine learning, they can recommend the best-performing elements for optimization.

SuperAGI takes a more developer-focused approach, offering dynamic variable testing through APIs. This allows teams to customize optimization systems, tweaking elements like font sizes, button colors, or image types based on real-time data.

The growing adoption of these tools reflects their impact. The A/B testing tools market is expected to hit $1.08 billion by 2025, driven by platforms that go beyond static comparisons to enable continuous, adaptive optimization as user behavior evolves.

What sets these platforms apart is their shared ability to:

- Automatically generate test hypotheses by analyzing user interactions and site data.

- Dynamically allocate traffic to top-performing variations as trends emerge.

- Test multiple variations simultaneously, streamlining the experimentation process.

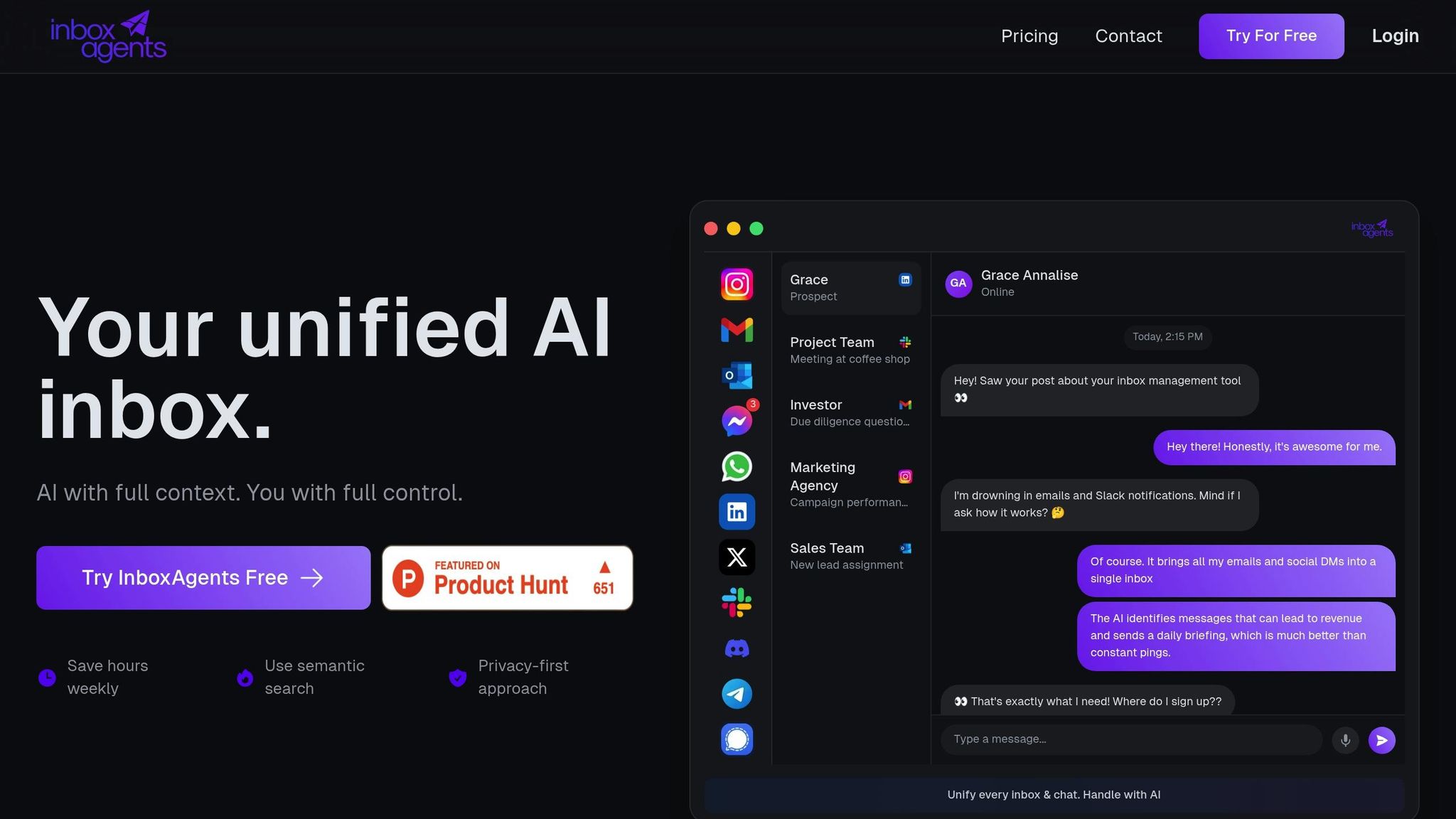

How Inbox Agents Supports AI-Powered Testing

While most platforms focus on websites and email campaigns, Inbox Agents brings AI-powered testing into the realm of messaging channels, transforming how businesses communicate with customers.

One standout feature, automated inbox summaries, lets teams test different summary formats and lengths. For example, businesses can experiment with text versus audio summaries or compare detailed formats with concise ones, helping customer service teams respond more efficiently.

The smart replies functionality allows testing of AI-generated response templates across various customer scenarios. This helps businesses pinpoint the phrasing, tone, and style that best connect with specific customer groups.

With personalized responses, Inbox Agents takes testing a step further by dynamically adapting messages based on customer data like purchase history or behavior patterns. Additionally, its automated outreach feature supports testing of different messaging sequences, timing strategies, and call-to-action formats, helping businesses optimize engagement and response rates.
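Whatever the channel, message tests need a stable way to assign each contact to a variant, so the same customer does not get one tone in the morning and another in the afternoon. A common technique is deterministic hash bucketing, sketched here as an assumption; it is not Inbox Agents' API, and the experiment and contact identifiers are hypothetical. Including the experiment name in the hash keeps assignments independent across experiments.

```python
import hashlib


def assign_variant(experiment, contact_id, variants, weights=None):
    """Deterministically map a contact to a variant so repeat messages stay consistent."""
    weights = weights or [1] * len(variants)
    digest = hashlib.sha256(f"{experiment}:{contact_id}".encode()).hexdigest()
    # First 13 hex digits (52 bits) give a value spread uniformly over [0, 1),
    # scaled here to [0, total weight).
    point = int(digest[:13], 16) / 16**13 * sum(weights)
    cumulative = 0
    for variant, weight in zip(variants, weights):
        cumulative += weight
        if point < cumulative:
            return variant
    return variants[-1]  # only reachable through floating-point rounding


# Hypothetical outreach test: two message sequences, 50/50 split.
print(assign_variant("outreach-timing-test", "contact-1042", ["morning", "evening"]))
```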

By unifying messaging channels, Inbox Agents eliminates fragmentation, providing a centralized view of content performance across platforms.

Tool Comparison Table

Here’s a breakdown of some leading AI-powered A/B testing platforms and their features:

| Platform | Dynamic Content Testing | Automated Creative Optimization | Real-Time Traffic Allocation | Integration Capabilities | Pricing Model | Best For |
|---|---|---|---|---|---|---|
| VWO | Yes | Yes | Yes | Integrates with tools like Google Analytics, Mixpanel | Subscription plans starting at $200/month | General CRO, e-commerce |
| Kameleoon | Yes | Yes | Yes | Connects with major analytics tools and CRM systems | Subscription plans starting at $300/month | Retail, SaaS companies |
| SuperAGI | Yes (via API) | Yes (custom models) | Yes | Custom integrations for developers | API-based pricing, custom quotes | Tech-savvy teams, developers |
| Inbox Agents | Yes (messaging focus) | Yes (smart replies, summaries) | Yes | Unifies messaging platforms in one interface | Tiered plans (Basic, Professional, Enterprise) | Customer communications, support teams |

The pricing models reflect the diverse needs of businesses. While enterprise solutions often require custom quotes, smaller businesses can explore entry-level plans with basic AI features. Free trials are also common, giving teams a chance to test capabilities before committing.

Integration flexibility is another critical factor. Tools like VWO and Kameleoon excel in connecting with existing analytics and marketing systems. Meanwhile, SuperAGI offers developers extensive customization options. Inbox Agents brings a unique advantage by consolidating customer communication channels, making it a go-to option for businesses prioritizing seamless, multi-channel optimization.

Limitations and Best Practices for AI-Powered A/B Testing

AI is reshaping how businesses approach A/B testing, offering tools to uncover insights and optimize performance. However, implementing these systems isn't without its hurdles. Understanding the challenges and following proven practices can make all the difference in achieving reliable outcomes.

Challenges of AI-Powered A/B Testing

Data privacy concerns are one of the most pressing issues. With regulations like GDPR and CCPA, companies must tread carefully when handling user data. AI systems require significant access to data to provide actionable insights, making robust privacy measures and compliance strategies essential.

Another challenge is algorithmic bias, which can distort results and lead to unfair outcomes. AI often reflects the biases present in its training data. For example, if historical data contains demographic biases, the AI might reinforce these patterns instead of providing fair and accurate optimization insights.

The resource demands of AI-powered solutions can also be a stumbling block, especially for smaller businesses. High computational power, specialized knowledge, and ongoing maintenance drive up costs, making these systems less accessible.

Data quality issues are another critical limitation. AI systems rely on accurate, up-to-date information to generate meaningful results. Poor-quality data - whether incomplete, outdated, or inaccurate - can lead to flawed insights. Additionally, overreliance on AI without human oversight can result in decisions that miss the broader business context or strategic goals.
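One widely used data-quality check for fixed-split tests is a sample ratio mismatch (SRM) test: if a 50/50 test ends with visibly unequal traffic, something in assignment or tracking is probably broken and the results should not be trusted. The sketch below runs a chi-square goodness-of-fit test with one degree of freedom for two variants; the 0.001 alert threshold is a common convention, used here as an assumption. With dynamic allocation the expected share changes over time, so this simple version applies only to fixed splits.

```python
from math import erfc, sqrt


def sample_ratio_mismatch(count_a, count_b, expected_share_a=0.5, alpha=0.001):
    """Chi-square goodness-of-fit test (1 degree of freedom) on a two-variant split.

    Returns (p_value, mismatch_detected).
    """
    total = count_a + count_b
    expected_a = total * expected_share_a
    expected_b = total - expected_a
    chi2 = (count_a - expected_a) ** 2 / expected_a + (count_b - expected_b) ** 2 / expected_b
    # For 1 degree of freedom, P(X > chi2) equals erfc(sqrt(chi2 / 2)).
    p_value = erfc(sqrt(chi2 / 2))
    return p_value, p_value < alpha


# Hypothetical traffic from tests configured as 50/50.
print(sample_ratio_mismatch(50_120, 49_880))  # small imbalance
print(sample_ratio_mismatch(5_000, 5_400))  # suspicious imbalance
```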

Addressing these limitations is crucial for businesses looking to unlock the full potential of AI in A/B testing.

Best Practices for Success

To get the most out of AI-powered A/B testing, businesses should start by setting clear, measurable objectives. Goals like improving conversion rates or boosting engagement metrics should align with broader business outcomes. Monitor these objectives continuously and adjust based on AI insights and stakeholder input.

Conducting regular bias audits is essential to ensure fairness. Train AI models with diverse datasets and use fairness metrics to evaluate their decision-making. This proactive approach helps catch issues early, preventing them from affecting results.
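A bias audit can start with something as simple as checking whether a variant that wins overall hurts particular segments. The sketch below computes the relative lift of treatment over control for each segment and flags segments below a tolerance. The segment labels, counts, and the -5% tolerance are hypothetical, and the check uses point estimates only; small segments would also need confidence intervals before acting on a flag.

```python
def segment_lifts(results):
    """Relative lift of treatment over control for each segment.

    results maps segment -> {"control": (conversions, visitors), "treatment": (...)}.
    """
    lifts = {}
    for segment, arms in results.items():
        c_conv, c_n = arms["control"]
        t_conv, t_n = arms["treatment"]
        control_rate = c_conv / c_n
        lifts[segment] = (t_conv / t_n - control_rate) / control_rate
    return lifts


def flag_harmed_segments(results, tolerance=-0.05):
    """Segments where the treatment underperforms control by more than the tolerance."""
    return sorted(s for s, lift in segment_lifts(results).items() if lift < tolerance)


# Hypothetical per-segment results for a treatment that wins overall.
results = {
    "18-24": {"control": (100, 2000), "treatment": (140, 2000)},
    "25-54": {"control": (200, 4000), "treatment": (205, 4000)},
    "55+": {"control": (80, 1000), "treatment": (60, 1000)},
}
print(segment_lifts(results))
print(flag_harmed_segments(results))
```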

Human validation of AI recommendations is another key practice. By comparing predicted outcomes with real-world results, businesses can ensure AI-driven insights align with their goals. For instance, track performance indicators like sales, customer satisfaction, or engagement to verify the AI's effectiveness.
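Comparing predicted outcomes with real-world results can be automated as a first pass before a human reviews the misses. In this sketch, the predicted conversion rates, observed counts, and the two-percentage-point tolerance are all hypothetical; the function simply reports the mean absolute error and which variants missed by more than the tolerance.

```python
def validate_predictions(records, max_abs_error=0.02):
    """Compare predicted conversion rates with observed ones.

    records: iterable of (variant, predicted_rate, conversions, visitors).
    Returns (mean absolute error, variants that missed by more than max_abs_error).
    """
    errors = {}
    for variant, predicted, conversions, visitors in records:
        observed = conversions / visitors
        errors[variant] = abs(predicted - observed)
    mean_abs_error = sum(errors.values()) / len(errors)
    misses = sorted(v for v, err in errors.items() if err > max_abs_error)
    return mean_abs_error, misses


# Hypothetical predictions from an AI tool versus what the live test produced.
records = [("A", 0.050, 48, 1000), ("B", 0.080, 52, 1000)]
mae, misses = validate_predictions(records)
print(f"MAE: {mae:.3f}, review needed for: {misses}")
```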

For platforms focused on messaging, like Inbox Agents, prioritizing privacy is critical. The company describes its approach:

"InboxAgents uses a privacy-first approach with encryption in transit and at rest, strict access controls, and industry-standard security practices. We never use your messages for advertising or to train generalized AI models. We comply with GDPR, CCPA, and Google API Services policies. All data processing is done solely to provide the features you enable."

Equally important is allowing user control over automation. Inbox Agents emphasizes this flexibility:

"You're always in control. InboxAgents allows you to customize automation levels for different types of messages, senders, or platforms. You can set certain contacts or topics to always require manual review while allowing others to be handled automatically."
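The kind of control described in this quote can be expressed as a small rule set in which manual-review rules always win and anything not explicitly allowed goes to a human. The class below is a hypothetical sketch of that idea, not Inbox Agents' actual configuration format or API; the contact, topic, and platform names are made up.

```python
from dataclasses import dataclass, field


@dataclass
class AutomationPolicy:
    """Hypothetical rules deciding which incoming messages may be auto-handled."""

    manual_contacts: set = field(default_factory=set)  # always reviewed by a person
    manual_topics: set = field(default_factory=set)  # e.g. billing, legal
    automated_platforms: set = field(default_factory=set)  # channels allowed to auto-reply

    def route(self, sender, topic, platform):
        # Manual-review rules take precedence over any automation setting.
        if sender in self.manual_contacts or topic in self.manual_topics:
            return "manual_review"
        if platform in self.automated_platforms:
            return "auto_reply"
        # Default to a human when no rule explicitly allows automation.
        return "manual_review"


policy = AutomationPolicy(
    manual_contacts={"ceo@bigclient.example"},
    manual_topics={"billing"},
    automated_platforms={"slack", "whatsapp"},
)
print(policy.route("ceo@bigclient.example", "scheduling", "slack"))
print(policy.route("someone@example.com", "scheduling", "slack"))
```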

Finally, maintain human oversight and monitor AI outputs continuously. This approach helps identify and correct issues like overfitting or underfitting, ensuring the AI remains effective and aligned with business needs.

Pitfalls vs. Solutions Table

Here’s a quick look at common challenges and how to address them:

| Common Pitfall | Impact | Recommended Solution |
|---|---|---|
| Data Privacy Violations | Legal risks, loss of trust | Use encryption, strict access controls, and ensure GDPR/CCPA compliance |
| Algorithmic Bias | Skewed results, unfair outcomes | Train with diverse datasets and conduct regular bias audits |
| Overreliance on AI | Poor decisions, missed context | Validate AI insights with human oversight and track real-world performance |
| Insufficient Resources | Implementation failures | Invest in scalable infrastructure and provide staff training on AI |
| Poor Data Quality | Flawed recommendations | Set data quality standards and perform regular validations |
| Lack of Clear Objectives | Misaligned outcomes, wasted effort | Define measurable goals and align them with key performance indicators (KPIs) |

SentinelOne, the cybersecurity company, is a good example of what this can deliver: it used AI-powered A/B testing to boost its conversion rate by 25% and cut design time by 30%. Results like these depend on the careful planning and validation described above.

Conclusion and Key Takeaways

AI is revolutionizing A/B testing, turning what was once a slow and manual process into a dynamic, data-driven optimization tool.

Industry projections point the same way: the A/B testing tools market is expected to reach $1.08 billion by 2025, and AI adoption in testing is projected to grow from 5% in 2021 to 30% by the same year. Companies using AI-powered testing report results up to 80% faster than traditional methods, along with noticeable gains in conversion rates and overall efficiency.

One standout advancement is real-time adaptability. Traditional A/B testing splits traffic evenly and often takes weeks to reach statistical significance; AI-driven systems instead allocate traffic dynamically based on performance. They can test multiple variables at once and keep optimizing while the test runs, which reduces the revenue lost to underperforming variations.

For messaging channels, tools like Inbox Agents highlight how AI enhances testing across unified interfaces. By evaluating response rates, engagement metrics, and conversion data in real time, businesses can refine their communication strategies on the fly. This is especially valuable for organizations managing high volumes of customer interactions.

AI also shifts the paradigm from static assets to systems that evolve continuously. Instead of the traditional "launch and leave" approach, businesses can now implement weekly updates based on real-time learning from user behavior. This ability to adapt quickly is critical for staying ahead in today’s fast-moving markets.

That said, success with AI-powered A/B testing requires the right balance. Automation must work hand-in-hand with human oversight to ensure high-quality data and clear, goal-oriented strategies. When AI insights are paired with thoughtful human validation, the results can be game-changing.

FAQs

How does AI-driven A/B testing address data privacy concerns under regulations like GDPR and CCPA?

AI-driven A/B testing offers a way for businesses to stay in line with data privacy regulations like GDPR and CCPA by relying on anonymized and aggregated datasets. This approach minimizes the exposure of personal information during testing, significantly lowering the chances of privacy violations.

Moreover, many AI tools are built to adhere to strict data management practices. These often include limiting how long data is stored and ensuring transparency about how data is processed. To maintain compliance and uphold user trust, businesses should also conduct regular audits of their AI systems to confirm they meet regulatory standards.
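A minimal sketch of the practices in this answer: replace raw user IDs with a keyed hash, ignore events outside a retention window, and report only per-variant counts, suppressing groups small enough to single people out. The key, the 30-day window, and the minimum group size of 50 are hypothetical. Note that keyed hashing is pseudonymization rather than anonymization: under GDPR, pseudonymized data still counts as personal data, so the aggregated output is what actually limits exposure.

```python
import hashlib
import hmac
from collections import defaultdict
from datetime import datetime, timedelta

# Hypothetical secret; in practice load it from a secrets manager and rotate it.
PSEUDONYM_KEY = b"replace-with-a-secret-key"


def pseudonymize(user_id):
    """Keyed hash (HMAC-SHA256) so raw user IDs never reach the analysis dataset."""
    return hmac.new(PSEUDONYM_KEY, user_id.encode(), hashlib.sha256).hexdigest()


def aggregate(events, now, retention_days=30, min_group_size=50):
    """Per-variant (converters, visitors) counts from recent events only.

    events: iterable of (user_id, variant, converted, timestamp).
    Groups smaller than min_group_size are suppressed from the output.
    """
    cutoff = now - timedelta(days=retention_days)
    visitors = defaultdict(set)
    converters = defaultdict(set)
    for user_id, variant, converted, timestamp in events:
        if timestamp < cutoff:
            continue  # outside the retention window: ignore (and ideally delete) it
        token = pseudonymize(user_id)
        visitors[variant].add(token)
        if converted:
            converters[variant].add(token)
    return {
        variant: (len(converters[variant]), len(users))
        for variant, users in visitors.items()
        if len(users) >= min_group_size
    }


# Hypothetical usage with a single recent event and suppression disabled.
now = datetime(2025, 1, 31)
print(aggregate([("user-1", "A", True, now - timedelta(days=2))], now, min_group_size=1))
```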

What are multi-armed bandit algorithms, and how do they improve A/B testing for dynamic content?

Multi-armed bandit algorithms are a type of machine learning designed to fine-tune decision-making on the fly. Unlike standard A/B testing, where traffic is evenly split and results take time to achieve statistical significance, these algorithms adjust traffic allocation dynamically. They send more users to the better-performing options as the test unfolds.

This method improves A/B testing by shortening the time it takes to identify top-performing content and reducing missed opportunities caused by sticking with underperforming variations. When dealing with dynamic content, these algorithms shine even brighter - they quickly adapt to shifts in user behavior, ensuring your audience consistently experiences the most relevant and engaging content.

How can businesses use AI to ensure A/B testing results are accurate and free from bias?

To get accurate and fair results from AI-driven A/B testing, it's crucial for businesses to start with diverse, high-quality datasets that truly represent their target audience. This reduces the risk of bias in the algorithms and ensures the insights are grounded in real-world behavior.

Alongside this, companies should make it a priority to audit and monitor AI performance regularly. Human oversight plays a key role in verifying outcomes and fine-tuning strategies when necessary. By aligning AI tools with clear objectives and ethical guidelines, businesses can unlock the full potential of A/B testing for creating dynamic and impactful content.