How AI Generates Privacy-Safe Synthetic Data

AI-generated synthetic data consists of artificial datasets that mimic the statistical properties of real data without reproducing personal or sensitive records. Done carefully, this supports privacy and helps with compliance under regulations like GDPR or HIPAA. Here's how it works:

- Data Preparation: Original datasets are cleaned by removing identifiers (e.g., names, emails) and standardizing formats.

- AI Models: Tools like GANs and VAEs generate synthetic records based on the patterns in the original data.

- Privacy Protections: Techniques like differential privacy add noise to prevent re-identification of individuals.

- Testing: Synthetic data is rigorously tested for accuracy and security against privacy attacks.

Synthetic data is widely used in industries like healthcare, finance, and AI training, offering a secure way to analyze and share data without compromising privacy. Future advancements include real-time generation and blockchain integration for added security.

How AI Creates Privacy-Safe Synthetic Data

Privacy-safe synthetic data takes sensitive information and transforms it into artificial datasets. This process is designed to retain the utility of the data while safeguarding individual privacy. Achieving this balance requires careful planning, advanced AI models, and strong privacy protections.

Data Collection and Preprocessing

The process begins with collecting and cleaning the original dataset. This involves removing direct identifiers like names, Social Security numbers, email addresses, and phone numbers from the raw data. That is only the first step, because combinations of the remaining fields (such as ZIP code, birth date, and gender) can still single out individuals.

Beyond removing identifiers, preprocessing ensures the data is consistent and ready for training. Formats are standardized, missing values are addressed, and ranges are normalized. For instance, dates might be converted to a uniform format, categorical variables encoded numerically, and outliers identified and adjusted.
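
The preprocessing steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a complete pipeline: the column names (`name`, `email`, `signup`, `plan`, `spend`), the US-style input date format, and the helper function names are all hypothetical.

```python
from datetime import datetime

# Hypothetical column names, used only for illustration.
DIRECT_IDENTIFIERS = {"name", "ssn", "email", "phone"}

def drop_identifiers(record):
    """Return a copy of the record without direct-identifier fields."""
    return {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}

def to_iso_date(text):
    """Convert an MM/DD/YYYY date string to ISO 8601 (YYYY-MM-DD)."""
    return datetime.strptime(text, "%m/%d/%Y").date().isoformat()

def min_max_scale(values):
    """Rescale numbers to [0, 1]; a constant column maps to all zeros."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]

def encode_categories(values):
    """Map each distinct category to an integer code, in sorted order."""
    codes = {cat: i for i, cat in enumerate(sorted(set(values)))}
    return [codes[v] for v in values], codes

# Made-up records for the sketch.
raw = [
    {"name": "A. Example", "email": "a@example.com", "signup": "01/15/2024", "plan": "pro", "spend": 120.0},
    {"name": "B. Example", "email": "b@example.com", "signup": "03/02/2024", "plan": "free", "spend": 0.0},
    {"name": "C. Example", "email": "c@example.com", "signup": "12/30/2023", "plan": "pro", "spend": 60.0},
]

cleaned = [drop_identifiers(r) for r in raw]
for r in cleaned:
    r["signup"] = to_iso_date(r["signup"])

spend_scaled = min_max_scale([r["spend"] for r in cleaned])
plan_codes, plan_mapping = encode_categories([r["plan"] for r in cleaned])
```

Integer codes are the simplest encoding. Many generative models work better with one-hot encoding for unordered categories, so treat the choice here as a placeholder.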

Another key step in this stage is data profiling, which analyzes the dataset's statistical properties, distributions, and relationships. This helps determine the best privacy protection methods and the level of noise the data can handle while still being useful.
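
As a rough sketch of what data profiling might compute, the example below summarizes one numeric column and measures the linear relationship between two columns. The column values are made up, and the function names are illustrative. The observed minimum and maximum are useful later because value ranges typically inform how much noise a privacy mechanism needs to add.

```python
import statistics

def profile_column(values):
    """Summarize a numeric column with a few basic statistics."""
    return {
        "min": min(values),
        "max": max(values),
        "mean": statistics.fmean(values),
        "stdev": statistics.stdev(values),
    }

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length columns."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5

# Made-up example columns.
age = [23, 35, 41, 52, 64]
income = [28_000, 42_000, 50_000, 61_000, 75_000]

age_profile = profile_column(age)
age_income_corr = pearson(age, income)
```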

Training AI Models

AI models like Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs) are often used to create synthetic data. GANs operate by having two neural networks - one generates synthetic records, and the other tries to distinguish them from real ones. The training continues until the synthetic data closely resembles the real dataset.
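
The two-network game can be written as a minimax objective. The article does not say which GAN variant is meant, so the formula below is the standard formulation from the original GAN paper, where $G$ is the generator, $D$ is the discriminator, $p_{\text{data}}$ is the real data distribution, and $p_z$ is the noise distribution fed to the generator:

$$
\min_G \max_D \; V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

The discriminator tries to make $V$ large by scoring real records near 1 and generated records near 0, while the generator tries to make $V$ small by producing records the discriminator scores near 1.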

VAEs take a different path. They compress the input data into a lower-dimensional representation and then use this to generate new samples. This approach offers more control over the data generation process and tends to be more stable during training.
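
In the standard VAE formulation (assumed here, since the article does not specify a variant), training maximizes the evidence lower bound for each record $x$, where $q_\phi(z \mid x)$ is the encoder, $p_\theta(x \mid z)$ is the decoder, and $p(z)$ is the prior over the latent representation $z$:

$$
\mathcal{L}(\theta, \phi; x) = \mathbb{E}_{q_\phi(z \mid x)}\big[\log p_\theta(x \mid z)\big] - D_{\mathrm{KL}}\big(q_\phi(z \mid x) \,\|\, p(z)\big)
$$

The first term rewards accurate reconstruction, and the second keeps the latent codes close to the prior, so that new records can be generated by sampling $z \sim p(z)$ and decoding.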

Careful hyperparameter tuning and regularization help reduce the risk that a model memorizes original records, but they do not guarantee it. For that reason, dedicated privacy protection methods are applied during or after training to shield the synthetic data from potential disclosure risks.

Adding Privacy Protection Methods

One of the most widely used techniques is differential privacy, which introduces calibrated noise into the training process or the outputs. This limits how much any single record can influence the result, which makes it hard to infer whether a given individual was in the data. The strength of this protection depends on the epsilon (ε) parameter, which balances privacy and utility. A lower epsilon provides stronger privacy but may reduce the data's usefulness, while a higher epsilon preserves more accuracy but with less privacy.
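
To make the role of epsilon concrete, here is a minimal sketch of the Laplace mechanism applied to a counting query. It assumes that neighboring datasets differ by adding or removing one record, so a count has sensitivity 1. The function names are illustrative, and the seeded pseudo-random generator is used only to keep the sketch repeatable. A production system would need a hardened noise sampler.

```python
import random

def noise_scale(sensitivity, epsilon):
    """Laplace scale b = sensitivity / epsilon: smaller epsilon, more noise."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    return sensitivity / epsilon

def laplace_noise(scale, rng):
    """Draw one sample from a zero-mean Laplace distribution."""
    # The difference of two independent Exponential(1) draws is Laplace(0, 1).
    return scale * (rng.expovariate(1.0) - rng.expovariate(1.0))

def private_count(records, predicate, epsilon, rng):
    """Count matching records, then add Laplace noise calibrated to epsilon."""
    true_count = sum(1 for r in records if predicate(r))
    # Adding or removing one record changes a count by at most 1.
    return true_count + laplace_noise(noise_scale(1.0, epsilon), rng)

rng = random.Random(42)  # seeded only so the sketch is repeatable
ages = [34, 51, 29, 62, 45, 38]  # made-up data
noisy_over_40 = private_count(ages, lambda a: a >= 40, epsilon=0.5, rng=rng)
```

With `epsilon=0.1` the noise scale is 10, and with `epsilon=2.0` it is 0.5, which is the privacy and utility trade-off described above expressed as a number.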

Other techniques can complement differential privacy. These might include adding Gaussian noise to numerical data, making controlled variations in categorical distributions, or applying k-anonymity constraints, which require every combination of quasi-identifiers to be shared by at least k records, to limit the chances of re-identification.
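
A k-anonymity constraint can be checked by grouping records on their quasi-identifiers and looking at the smallest group. The sketch below assumes hypothetical quasi-identifier columns (`age_band`, `zip3`) and made-up records. It only checks the constraint and does not perform the generalization or suppression needed to satisfy it.

```python
from collections import Counter

def smallest_group_size(records, quasi_identifiers):
    """Size of the smallest group sharing the same quasi-identifier values."""
    if not records:
        return 0
    groups = Counter(tuple(r[q] for q in quasi_identifiers) for r in records)
    return min(groups.values())

def is_k_anonymous(records, quasi_identifiers, k):
    """True if every quasi-identifier combination appears at least k times."""
    return smallest_group_size(records, quasi_identifiers) >= k

# Made-up records with hypothetical column names.
records = [
    {"age_band": "30-39", "zip3": "941", "diagnosis": "A"},
    {"age_band": "30-39", "zip3": "941", "diagnosis": "B"},
    {"age_band": "40-49", "zip3": "941", "diagnosis": "A"},
    {"age_band": "40-49", "zip3": "941", "diagnosis": "C"},
    {"age_band": "40-49", "zip3": "100", "diagnosis": "B"},
]

quasi = ["age_band", "zip3"]
passes_k2 = is_k_anonymous(records, quasi, k=2)  # last record is alone in its group
```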

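In differentially private training, gradient clipping bounds each record's influence on a model update, and privacy accounting tracks the cumulative privacy loss across training steps. Together they help defend against advanced attacks, such as membership inference.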
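
The sketch below shows how gradient clipping and noise might fit together in one differentially private training step, in the style of DP-SGD. It is an assumption that the pipeline uses this particular algorithm. The function names and the parameters `max_norm` and `noise_multiplier` are illustrative, and the privacy accounting itself is not implemented here. In practice an accountant would translate the noise multiplier, sampling rate, and number of steps into a cumulative epsilon.

```python
import math
import random

def l2_norm(vec):
    """Euclidean norm of a gradient vector."""
    return math.sqrt(sum(v * v for v in vec))

def clip_gradient(grad, max_norm):
    """Scale a per-example gradient down so its L2 norm is at most max_norm."""
    norm = l2_norm(grad)
    factor = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * factor for g in grad]

def noisy_mean_gradient(per_example_grads, max_norm, noise_multiplier, rng):
    """Clip each example's gradient, sum, add Gaussian noise, then average."""
    clipped = [clip_gradient(g, max_norm) for g in per_example_grads]
    dim = len(clipped[0])
    summed = [sum(g[i] for g in clipped) for i in range(dim)]
    # Noise standard deviation is tied to the clipping bound.
    sigma = noise_multiplier * max_norm
    noisy = [s + rng.gauss(0.0, sigma) for s in summed]
    return [v / len(clipped) for v in noisy]

rng = random.Random(7)  # seeded only so the sketch is repeatable
grads = [[3.0, 4.0], [0.3, 0.4]]  # made-up per-example gradients
update = noisy_mean_gradient(grads, max_norm=1.0, noise_multiplier=1.1, rng=rng)
```

Clipping is what makes the noise meaningful: without a bound on each example's contribution, no fixed amount of noise could mask a single record.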

Testing Data Quality and Privacy

Once privacy measures are in place, the synthetic data undergoes rigorous testing to ensure it’s both useful and secure. Statistical tests check that correlations, distributions, and key relationships in the data are preserved. This ensures the synthetic data retains the analytical value of the original dataset.
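
One simple way to compare a real column with its synthetic counterpart is the two-sample Kolmogorov-Smirnov statistic, which is the largest gap between the two empirical cumulative distribution functions. The article does not name a specific test, so this is one illustrative choice, with made-up values and a hypothetical function name. A value near 0 means the distributions look similar, and a value near 1 means they barely overlap.

```python
from bisect import bisect_right

def ks_statistic(sample_a, sample_b):
    """Largest absolute gap between the two empirical CDFs."""
    a, b = sorted(sample_a), sorted(sample_b)
    max_gap = 0.0
    # The largest gap always occurs at one of the observed data points.
    for x in a + b:
        cdf_a = bisect_right(a, x) / len(a)
        cdf_b = bisect_right(b, x) / len(b)
        max_gap = max(max_gap, abs(cdf_a - cdf_b))
    return max_gap

real_ages = [1, 2, 3, 4]       # made-up real column
synthetic_ages = [1, 2, 3, 5]  # made-up synthetic column
gap = ks_statistic(real_ages, synthetic_ages)
```

A per-column check like this says nothing about relationships between columns, so it would normally sit alongside correlation comparisons and downstream model benchmarks.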

Privacy testing involves simulating attacks to assess the strength of the protections. These tests might include membership inference attacks, attribute inference attempts, or re-identification efforts using external data sources. Successfully protected synthetic data should resist these attacks while remaining statistically useful.
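
A lightweight first check before running full attack simulations is to look for synthetic records that sit suspiciously close to a real record, sometimes called a distance-to-closest-record check. The sketch below is a heuristic under stated assumptions: features are numeric and already scaled to comparable ranges, the threshold is arbitrary, and the function names are hypothetical. Passing it does not prove resistance to membership or attribute inference.

```python
import math

def distance_to_closest_record(synthetic_row, real_rows):
    """Euclidean distance from one synthetic row to its nearest real row."""
    return min(math.dist(synthetic_row, real) for real in real_rows)

def flag_near_copies(synthetic_rows, real_rows, threshold):
    """Indices of synthetic rows within `threshold` of some real row."""
    return [
        i
        for i, row in enumerate(synthetic_rows)
        if distance_to_closest_record(row, real_rows) <= threshold
    ]

# Made-up, pre-scaled rows.
real_rows = [(0.1, 0.2), (0.8, 0.9)]
synthetic_rows = [(0.1, 0.2), (0.5, 0.5), (0.79, 0.9)]

suspects = flag_near_copies(synthetic_rows, real_rows, threshold=0.05)
```

Here the first synthetic row is an exact copy of a real row and the third is nearly one, so both would be flagged for review.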

Finally, the synthetic dataset is benchmarked for performance on real-world tasks and checked for compliance with regulations like GDPR, HIPAA, or CCPA. This includes thorough documentation of the privacy methods used, maintaining detailed audit trails, and setting up procedures for ongoing monitoring to ensure long-term privacy protection.

Understanding Differential Privacy

Differential privacy is a mathematical framework designed to protect sensitive data. Unlike traditional methods that address privacy concerns after data has been collected, differential privacy embeds protection directly into how data is accessed and processed.

What is Differential Privacy?

At its core, differential privacy controls how algorithms interact with a dataset. When users query the dataset, the system adds carefully calibrated noise to the responses based on a parameter called epsilon (ε). This ensures that adding or removing a single record doesn’t significantly change the distribution of results. The epsilon value sets the balance between privacy and accuracy, offering a clear trade-off. The guarantee holds no matter what external information an attacker has, and the privacy loss from multiple queries adds up in a measurable way, which is why it is tracked as a budget. By doing so, differential privacy not only safeguards personal data but also lays the groundwork for advanced privacy techniques in AI systems.
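
Stated formally, a randomized mechanism $\mathcal{M}$ satisfies $\varepsilon$-differential privacy if, for every pair of datasets $D$ and $D'$ that differ in a single record and for every set of possible outputs $S$:

$$
\Pr[\mathcal{M}(D) \in S] \le e^{\varepsilon} \, \Pr[\mathcal{M}(D') \in S]
$$

When $\varepsilon$ is small, $e^{\varepsilon}$ is close to 1, so the output distribution is nearly the same with or without any one person's record. This is the pure form of the definition. Many practical systems use a relaxed variant with an additional small failure probability $\delta$.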

Differential Privacy vs. Standard Anonymization

Differential privacy and traditional anonymization take very different approaches to protecting sensitive information. Here's a quick comparison:

| Feature | Traditional Anonymization | Differential Privacy |
|---|---|---|
| Approach | Alters the dataset (e.g., by erasing, adding noise, aggregating, pseudonymizing, or hashing) and shares the anonymized version. | Keeps the dataset secure, adds noise to query responses, and controls algorithm access. |
| Output Shared | An anonymized dataset is shared. | No dataset is shared - only noisy answers to queries are provided. |
| Re-identification Risk | Vulnerable to re-identification if datasets are updated or compared with others. | Provides a mathematically provable bound on re-identification risk that holds with evolving data and repeated queries, as long as the privacy budget is respected. |
| Control | Limited control once the anonymized data is released. | Maintains full control over the dataset and the algorithms interacting with it. |
| Use Cases | Best for sharing static datasets with researchers or regulated third parties. | Ideal for dynamic datasets and situations requiring strong, provable privacy safeguards. |

These differences make differential privacy especially effective for dynamic datasets, where traditional anonymization methods often fall short.

New Advances in Privacy Methods

Recent innovations are making differential privacy even more practical and accessible. For instance, differentially private model training allows AI systems to learn from sensitive data without memorizing individual records. By introducing noise during the training process, these models avoid retaining personal information.

In addition, privacy-preserving APIs now automate the application of differential privacy to queries, enabling organizations to enforce strict privacy standards without requiring extensive technical expertise. Another exciting development is the integration of differential privacy with federated learning. This combination allows multiple organizations to collaboratively train shared AI models without revealing their individual datasets. These advancements are transforming differential privacy into a powerful tool for balancing data utility with robust privacy protections.
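
To illustrate the federated part of that combination, the sketch below shows federated averaging, one common aggregation rule. It is an assumption here, since the article does not name a specific algorithm. Each organization trains locally and shares only model parameters, which a coordinator combines in proportion to local dataset size. The differential privacy part, which would clip and add noise to each update before aggregation, is not shown, and all names and numbers are illustrative.

```python
def federated_average(client_weights, client_sizes):
    """Average client parameter vectors, weighted by local dataset size."""
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(dim)
    ]

# Made-up parameters from two organizations; raw records never leave each site.
client_weights = [[1.0, 2.0], [3.0, 4.0]]
client_sizes = [1, 3]  # number of local training records

global_weights = federated_average(client_weights, client_sizes)
```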

Best Practices for Privacy-Safe Synthetic Data

Crafting privacy-safe synthetic data isn't just about choosing the right algorithms - it requires a well-thought-out operational approach to ensure both privacy and usability at every stage of the process.

Setting Privacy Parameters

The concept of epsilon (ε) plays a key role in balancing privacy and accuracy. A lower epsilon value offers stronger privacy protection but may reduce the data's usefulness, and vice versa. It's critical to allocate your "privacy budget" thoughtfully across analyses and to revisit these settings as data evolves or regulations change.
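
A privacy budget can be tracked with very little code. The sketch below assumes basic sequential composition, under which the epsilon values of successive analyses on the same data simply add up. Tighter accounting methods exist but are not shown. The class name, labels, and numbers are hypothetical.

```python
class PrivacyBudget:
    """Track cumulative epsilon under basic sequential composition."""

    def __init__(self, total_epsilon):
        self.total_epsilon = total_epsilon
        self.spent = 0.0
        self.log = []  # (label, epsilon) pairs, useful for audit trails

    @property
    def remaining(self):
        return self.total_epsilon - self.spent

    def spend(self, epsilon, label):
        """Record an analysis, refusing it if the budget would be exceeded."""
        if epsilon <= 0:
            raise ValueError("epsilon must be positive")
        # Small tolerance guards against floating-point rounding.
        if self.spent + epsilon > self.total_epsilon + 1e-12:
            raise RuntimeError(f"budget exceeded by analysis {label!r}")
        self.spent += epsilon
        self.log.append((label, epsilon))

budget = PrivacyBudget(total_epsilon=1.0)
budget.spend(0.5, "train synthetic generator")
budget.spend(0.25, "release summary statistics")
# A further analysis costing 0.5 would now be refused.
```

Refusing an analysis outright is a deliberately strict design choice. An alternative would be to lower the requested epsilon to whatever remains, at the cost of noisier results.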

Organizations should test a variety of epsilon values to find the sweet spot for their specific datasets and objectives. Once this balance is achieved, thorough documentation and regular testing can reinforce the effectiveness of these privacy measures.

Documentation and Testing

Keep detailed records of every step in the data preprocessing journey. This includes documenting which fields were modified, anonymized, or excluded. Also, track all data transformations, and regularly run privacy tests such as membership inference attacks alongside statistical checks, so you can confirm the synthetic data stays both statistically sound and protective of privacy.

Version control is equally essential, particularly when dealing with multiple iterations of datasets. By tracking the privacy parameters, algorithms, and source data versions used in each iteration, organizations can ensure reproducibility and quickly address any issues. These steps, combined with earlier quality checks, create a robust framework for privacy protection.
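
One way to capture this information is a small, immutable record stored next to each synthetic dataset. The sketch below is only an illustration: the field names, version strings, and values are hypothetical, and a real manifest would follow whatever schema the organization's audit process requires.

```python
import json
from dataclasses import asdict, dataclass

@dataclass(frozen=True)
class SyntheticReleaseRecord:
    """Metadata needed to reproduce and audit one synthetic dataset."""
    dataset_version: str
    source_data_version: str
    generator: str
    epsilon: float
    excluded_fields: tuple
    notes: str = ""

# Hypothetical values for illustration.
record = SyntheticReleaseRecord(
    dataset_version="synthetic-v3",
    source_data_version="source-2024-06",
    generator="example-gan",
    epsilon=1.0,
    excluded_fields=("name", "email"),
    notes="Epsilon lowered from 2.0 after privacy review.",
)

# Sorted keys keep the manifest stable, so diffs in version control stay readable.
manifest = json.dumps(asdict(record), indent=2, sort_keys=True)
```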

Following Data Protection Laws

Synthetic data can help organizations align with regulatory requirements, but compliance demands a thorough understanding of how laws like GDPR, CCPA, and HIPAA treat such data. It's important to ensure that synthetic data processes adhere to principles like data minimization and purpose limitation.

Legal teams should be involved to document how synthetic data practices meet regulatory standards. This includes detailing the technical measures in place to prevent re-identification and demonstrating that synthetic datasets are used responsibly and for legitimate purposes.

Working with AI Platforms

AI platforms increasingly rely on privacy-safe synthetic data to enhance their capabilities while safeguarding user privacy. These platforms need training data to develop features like automated responses, summarization, and spam filtering, but they must do so without compromising sensitive information.

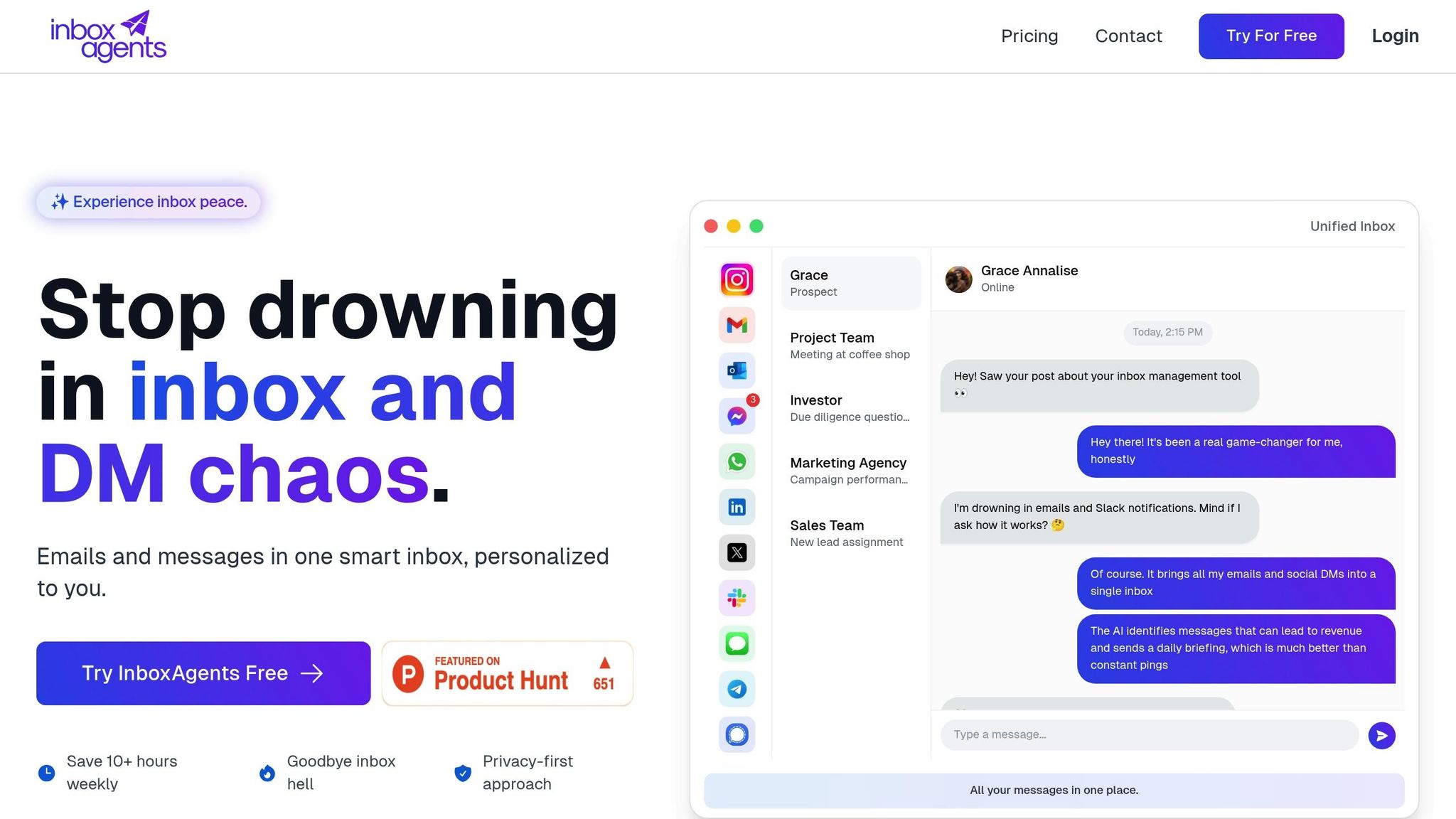

For instance, Inbox Agents uses synthetic data to train AI models that can understand communication patterns and contextual nuances without exposing real user messages. This ensures privacy while enabling the AI to perform effectively.

When selecting AI platforms for privacy-sensitive applications, organizations should evaluate how synthetic data is integrated into training and operations. This ensures strong privacy protections, regulatory compliance, and effective system performance.

Uses and Future of Privacy-Safe Synthetic Data

Privacy-safe synthetic data has transitioned from being a theoretical concept to a practical tool across various industries. It’s now a go-to solution for organizations looking to balance innovation with privacy, particularly in sensitive areas like healthcare and finance.

Industry Use Cases

In healthcare, synthetic data supports medical research while helping organizations meet HIPAA requirements. For instance, medical device companies use synthetic patient data to train diagnostic algorithms without needing access to real patient records. This lets researchers develop and test treatments while safeguarding patient privacy.

The financial sector employs synthetic data for tasks like fraud detection and risk assessment. Banks simulate transaction data that reflects genuine spending behaviors, allowing them to train machine learning models to detect suspicious activities without exposing actual customer data.

Telecommunications companies rely on synthetic data to enhance network performance and predict usage trends. By crafting synthetic call and data usage records, they can safely test new infrastructure setups and pricing strategies without compromising customer information.

In the automotive industry, synthetic driving data plays a key role in training autonomous vehicle systems. This data replicates diverse scenarios, including various road conditions, weather events, and traffic patterns - situations that would be risky or impractical to gather during real-world testing.

These applications highlight the growing importance of synthetic data in practical, privacy-focused solutions, paving the way for platforms like Inbox Agents to integrate it into messaging systems.

Inbox Agents' Privacy-Safe Messaging Solutions

Inbox Agents showcases how synthetic data can power AI-driven messaging tools while maintaining user privacy. The platform generates synthetic messaging data to train its AI models for features such as automated inbox summaries, smart replies, and negotiation handling.

When developing spam and abuse filters, Inbox Agents uses synthetic examples of harmful messages rather than analyzing real user communications. This ensures users’ private messages remain untouched while still enabling the creation of effective filtering systems.

For message prioritization, the platform trains its AI on synthetic conversation data that mirrors real-world business scenarios and communication styles. This allows the system to assess message importance intelligently without compromising the privacy of actual business interactions.

Future Trends in Synthetic Data

The role of synthetic data continues to evolve, with new trends shaping its potential to safeguard privacy and drive innovation.

Regulatory acceptance of synthetic data is gaining momentum, with governing bodies beginning to recognize it as a legitimate privacy-preserving tool. This could lead to the establishment of formal guidelines and certification processes.

Federated synthetic data generation is emerging as a collaborative method where multiple organizations can create synthetic datasets together without sharing raw data. This approach enables cross-industry insights while respecting privacy boundaries.

The rise of real-time synthetic data generation is transforming how streaming data is handled. Instead of storing sensitive real-time information, systems can generate synthetic equivalents on the fly, reducing both privacy risks and storage demands.

Advancements in quality assessment standards are providing better tools to evaluate synthetic data for both privacy protection and utility, helping organizations ensure their datasets are effective and secure.

Finally, the integration of blockchain technology with synthetic data is unlocking new opportunities for data verification and provenance. This combination allows organizations to verify the authenticity and privacy compliance of their synthetic datasets without exposing the methods used to create them.

As AI models grow more sophisticated, the need for high-quality synthetic training data will only increase. Organizations that excel in generating privacy-safe synthetic data will gain a competitive edge, enabling them to build cutting-edge AI solutions while maintaining trust and meeting regulatory standards.

Conclusion

Privacy-safe synthetic data is reshaping how organizations approach AI development and handle data. By combining AI-driven data generation with techniques like differential privacy, businesses can now extract valuable insights from data without compromising individual privacy or violating regulatory standards.

However, achieving this balance isn’t straightforward. It calls for technical know-how and careful planning. Companies need to fine-tune privacy settings, document their processes meticulously, and stay aligned with ever-changing data protection laws. When done right, this approach paves the way for groundbreaking advancements in AI while maintaining trust and compliance.

Synthetic data is already fueling real-world AI applications across various sectors. For example, Inbox Agents uses synthetic data to train its AI models without exposing sensitive user communications. This enables features like automated summaries, smart replies, and spam filtering - all while safeguarding user privacy.

Looking ahead, advancements in federated synthetic data generation, real-time data creation, and blockchain integration are expected to open up even more opportunities for privacy-safe AI. Organizations that adopt these emerging methods will be better equipped to innovate while navigating complex privacy regulations.

Ultimately, the success of privacy-safe synthetic data hinges on treating privacy as a driver of sustainable AI progress. By blending robust privacy measures with cutting-edge AI, platforms like Inbox Agents are delivering secure and intelligent solutions. This approach empowers businesses to build AI systems that are not only effective and compliant but also foster trust among users and stakeholders.

FAQs

How does AI create synthetic data that protects privacy while remaining useful, and what is the role of the epsilon parameter?

AI generates synthetic data by mimicking the statistical patterns and structure of the original dataset, but without using any actual personal information. This approach keeps the data valuable for analysis while protecting individual privacy.

The epsilon (ε) parameter in differential privacy plays a crucial role in balancing data utility and privacy. A lower epsilon value indicates stronger privacy protection, as it introduces more noise, making it harder to identify individuals. However, this added noise reduces the accuracy of the synthetic data, sometimes substantially at very low epsilon values. Striking the right balance between privacy and utility is essential.

How does differential privacy differ from traditional anonymization methods, and why is it considered more secure?

Traditional methods like k-anonymity and data masking aim to protect individual privacy by removing or generalizing personal identifiers. While these approaches can provide a basic layer of protection, they have a significant weakness: they are vulnerable to linkage attacks. When the released data is combined with other datasets or targeted by sophisticated techniques, individuals can often be re-identified.

Differential privacy takes a different approach, offering mathematical safeguards that significantly reduce the chances of identifying someone, even if the data is analyzed repeatedly or in great detail. This makes it a stronger option for addressing both present and evolving privacy challenges. Its ability to secure data across multiple analyses is one of the main reasons it’s seen as a more reliable solution compared to older methods.

How do AI models like GANs and VAEs create synthetic data, and what are the benefits of using them?

AI models like GANs (Generative Adversarial Networks) and VAEs (Variational Autoencoders) have become essential for creating synthetic data. These models analyze real datasets to learn patterns and then generate new, realistic data that mimics the original - without revealing sensitive information.

Here’s how they work: GANs operate with two networks - a generator and a discriminator - that essentially play a game. The generator creates data, while the discriminator evaluates it, pushing the generator to produce increasingly realistic outputs. This makes GANs especially effective for generating sharp and detailed images. VAEs, on the other hand, take a different approach. They compress data into a latent space (a smaller, abstract representation) and then reconstruct it, allowing them to produce diverse variations. This makes them particularly useful for working with complex or noisy datasets.

The standout advantage of these models is their ability to produce high-quality, varied synthetic datasets. These datasets can be used to improve AI training while ensuring privacy, making them a go-to solution for scenarios where sharing or using real data isn’t an option.