Customer Consent in AI: Why It Matters

Customer consent in AI is about users agreeing to how their data is collected and used. This is critical for legal compliance, ethical practices, and building trust. Regulations like GDPR, CCPA, and upcoming state laws (e.g., Texas’s TRAIGA in 2026) set consent, disclosure, and opt-out requirements, with explicit consent most often required for sensitive data like biometrics. Companies face challenges like managing consent across platforms, avoiding excessive requests, and simplifying opt-out processes.

Key takeaways:

- Laws like Illinois’ BIPA require explicit written consent for biometric data, while the CCPA centers on opt-out, deletion, and transparency rights.

- Consent fatigue from too many prompts reduces user trust.

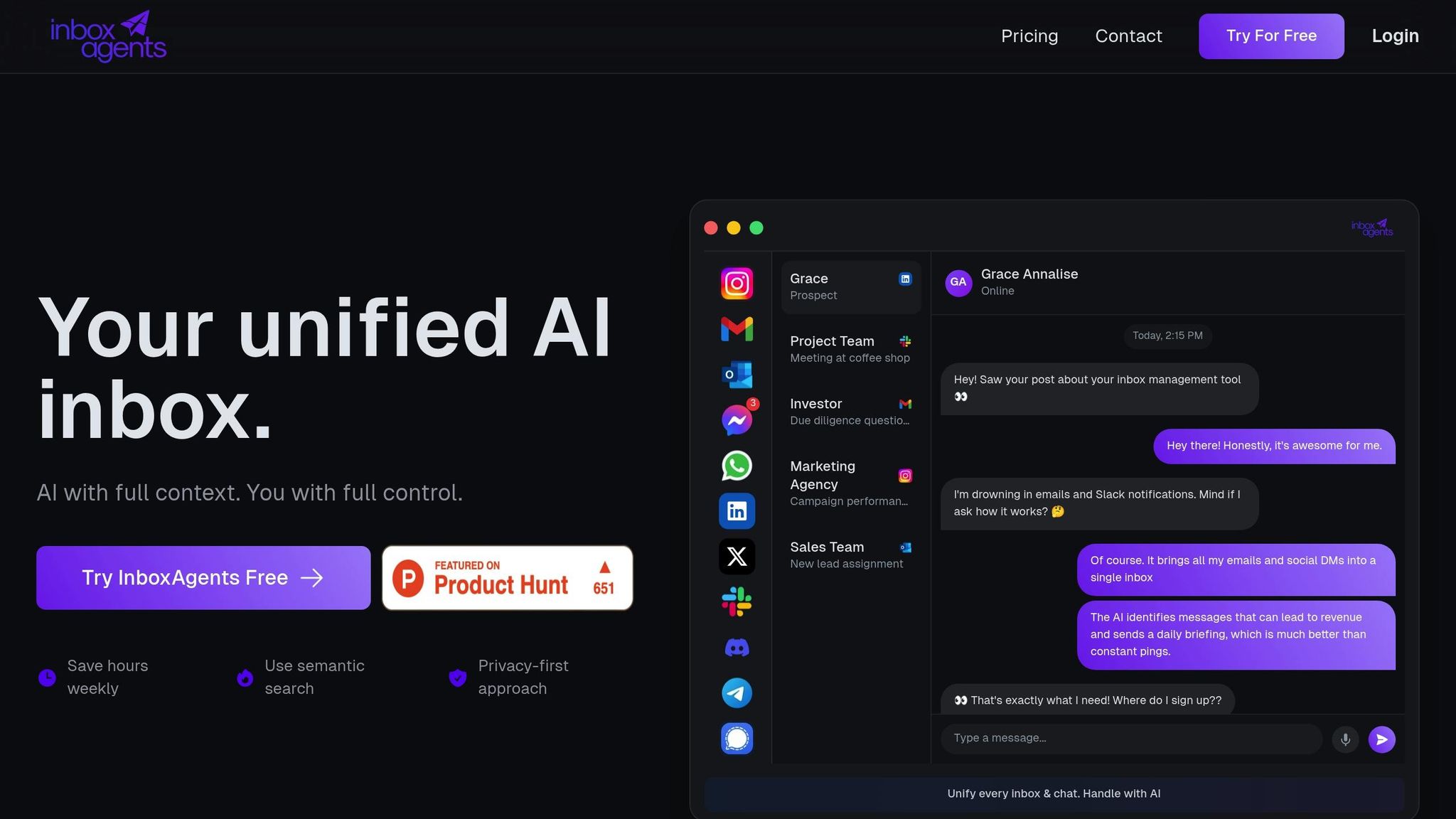

- Centralized tools like Inbox Agents simplify consent tracking.

- Collect only necessary data to streamline compliance and improve user experience.

Proper consent systems aren’t just about meeting legal requirements - they’re about ensuring users feel secure and in control of their data.

U.S. Laws That Require Customer Consent

Major Laws and Regulations

In the United States, there isn’t a single federal law governing AI consent. Instead, businesses must navigate a mix of state-specific and industry-focused regulations.

California has taken a leading role with the California Consumer Privacy Act (CCPA) and the California Privacy Rights Act (CPRA). The CPRA, which went into effect in 2023, requires businesses to provide transparency about data collection, allow consumers to opt out of data sharing, and delete personal information upon request. Failing to comply can result in fines of up to $7,500 per intentional violation and $2,500 per unintentional violation.

Texas’s Responsible Artificial Intelligence Governance Act (TRAIGA) takes effect on January 1, 2026. This law mandates clear disclosure and informed consent for biometric data collection while banning discriminatory AI practices. Penalties range from $10,000 to $200,000 per violation, with additional daily fines for ongoing noncompliance. TRAIGA also includes a regulatory sandbox where companies can test AI systems in a controlled environment.

Illinois’ Biometric Information Privacy Act (BIPA) is one of the strictest in the country. It requires written consent before collecting or using biometric data, such as facial recognition or fingerprints, in AI systems. BIPA also gives individuals a private right of action, allowing them to sue companies directly for violations, which sets it apart from most U.S. privacy laws.

Utah’s Artificial Intelligence Policy Act focuses on disclosure. Businesses must inform customers when AI is used in interactions, particularly for regulated services. If a consumer specifically asks about AI’s role in a service, companies are required to provide additional disclosures.

Other states, including Maine, Massachusetts, New York, Hawaii, Idaho, Arkansas, Montana, and Pennsylvania, have passed or proposed laws requiring disclosure and consent for AI use, particularly in high-risk or sensitive scenarios.

At the federal level, the Federal Trade Commission (FTC) has issued guidance emphasizing fairness and accuracy in AI systems. While the FTC doesn’t mandate consent, its recommendations stress avoiding discrimination and ensuring transparency in automated decision-making.

| State/Law | Consent Requirement | Disclosure Requirement | Biometric Data Rules | Enforcement/Penalties |
|---|---|---|---|---|
| California (CCPA/CPRA) | Opt-out, deletion, transparency | Disclosure for automated decisions | Regulates sensitive data | Up to $7,500/violation |
| Texas (TRAIGA) | Informed consent for biometrics | Clear, conspicuous disclosure | Written consent required | $10,000–$200,000/violation |
| Illinois (BIPA) | Written consent for biometrics | - | Strictest in U.S. | Private right of action |
| Utah | Disclosure for regulated services | Disclosure if asked | - | State enforcement |

These diverse state laws create a challenging regulatory environment for businesses.

Compliance Difficulties for Companies

Meeting these legal requirements isn’t easy for companies. The fragmented nature of state laws stretches compliance teams thin, especially as regulations continue to evolve.

Take messaging platforms like Inbox Agents, for example. They must implement consent mechanisms that align with multiple state laws, all while keeping interfaces user-friendly. Adding third-party integrations makes things even trickier, requiring careful tracking of data flows and clear communication with users.

Another challenge lies in obtaining explicit consent without limiting access to services. Regulations often prohibit tying essential services to consent for non-essential data processing, even though some data collection is crucial for functionality.

Updating consent processes is resource-intensive. As laws change, companies must constantly adapt their practices, which can mean hiring dedicated compliance staff and increasing operational costs. The lack of standardization across states adds to the complexity. A system that works for California might not meet Texas’ requirements, forcing businesses to create multiple workflows or adopt the strictest standards across the board.
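The "strictest standards across the board" option can be pictured as taking the union of requirements from every state a business serves. The sketch below is illustrative only: the `REQUIREMENTS` mapping, its flag names, and the `strictest_requirements` helper are hypothetical, and the values loosely mirror the simplified summary table above rather than the statutes themselves.

```python
# Hypothetical requirement flags per jurisdiction, loosely based on the
# simplified summary table in this article. Not a statement of the law.
REQUIREMENTS: dict[str, set[str]] = {
    "CA": {"opt_out", "deletion_on_request", "automated_decision_disclosure"},
    "TX": {"biometric_informed_consent", "ai_disclosure"},
    "IL": {"biometric_written_consent"},
    "UT": {"ai_disclosure_on_request"},
}


def strictest_requirements(states: list[str]) -> set[str]:
    """Return the union of requirements for every state served.

    Applying the union everywhere is one way to run a single workflow
    instead of maintaining a separate one per state.
    """
    combined: set[str] = set()
    for state in states:
        # States missing from the mapping contribute nothing here; a real
        # system would more likely flag them for legal review.
        combined |= REQUIREMENTS.get(state, set())
    return combined


print(sorted(strictest_requirements(["CA", "IL"])))
```

The trade-off is that users in less demanding states see the same prompts as everyone else, which keeps the workflow simple but can add to consent fatigue.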

Some states, like Texas, offer safe harbor provisions to ease the burden. For example, companies following recognized risk management frameworks, such as NIST guidelines, may face reduced penalties. However, implementing these frameworks requires additional expertise and investment.


Common Problems With Consent Systems

Even with strict legal requirements, setting up effective consent systems remains a tough task. Missteps can lead to compliance issues and erode customer trust.

Too Many Consent Requests

One of the biggest hurdles in consent management today is consent fatigue. According to a 2023 Forrester study, the average U.S. consumer faces dozens of consent requests every week across various digital platforms.

For AI-driven services, the problem is even worse. Take an AI messaging platform, for example. A single user might encounter separate consent prompts for conversation analysis, automated responses, data storage, third-party integrations, and marketing communications. These repeated interruptions not only disrupt user workflows but also lead to lower opt-in rates and more customer complaints. In some cases, they even drive users away.

When overwhelmed, users often resort to clicking "accept all" without fully understanding what they’re agreeing to. This undermines the entire purpose of informed consent. A 2023 Cisco Consumer Privacy Survey found that 81% of consumers worry about how companies handle their data, and 76% say they wouldn’t buy from a company they don’t trust. Bombarding users with too many prompts can deepen this distrust.

These issues make it hard for businesses to maintain consistent consent practices across their platforms.

Managing Consent Across Different Platforms

Most businesses rely on a mix of email marketing, SMS, social media messaging, website chat, mobile apps, and third-party tools. Each of these channels comes with its own consent requirements, technical quirks, and user interfaces. Synchronizing consent preferences across all these touchpoints is no small feat. For instance, if a customer opts out via email, that preference should automatically apply to SMS and app notifications too. But in reality, differences in data formats, outdated systems, and irregular update schedules often get in the way.

To tackle this issue, some companies are turning to unified platforms like Inbox Agents. These tools centralize consent records, making it easier to ensure consistency across all channels.
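As a rough illustration of what centralizing consent records means in practice, here is a minimal sketch of a shared registry in which an opt-out received on one channel applies to every channel. The `ConsentRegistry` class and the channel names are hypothetical and do not describe how Inbox Agents or any other product works.

```python
from dataclasses import dataclass, field

# Hypothetical channel names; a real deployment would use its own identifiers.
CHANNELS = ("email", "sms", "app", "chat")


@dataclass
class ConsentRegistry:
    """One shared record of consent per customer, keyed by channel."""

    records: dict[str, dict[str, bool]] = field(default_factory=dict)

    def opt_in(self, customer_id: str, channel: str) -> None:
        # Opt-in is recorded only for the channel where it was given.
        prefs = self.records.setdefault(customer_id, {c: False for c in CHANNELS})
        prefs[channel] = True

    def opt_out(self, customer_id: str) -> None:
        # An opt-out received on any channel is applied to every channel.
        self.records[customer_id] = {c: False for c in CHANNELS}

    def may_contact(self, customer_id: str, channel: str) -> bool:
        # Unknown customers and unknown channels default to "no consent".
        return self.records.get(customer_id, {}).get(channel, False)


registry = ConsentRegistry()
registry.opt_in("cust-1", "email")
registry.opt_in("cust-1", "sms")
registry.opt_out("cust-1")  # e.g. triggered by an email unsubscribe link
print(registry.may_contact("cust-1", "sms"))  # False
```

Because every channel reads from the same record, there is no per-channel copy that can fall out of sync.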

The challenge of managing consent across platforms highlights the importance of streamlining the process for users.

Making Consent Easy to Find and Cancel

Even when businesses collect consent properly, they often fail to make it simple for users to manage or revoke their preferences later. The proposed AI CONSENT Act directly addresses this by requiring that users be able to grant or withdraw consent at any time through a clear and accessible process.

Unfortunately, many companies bury consent settings deep within menus, forcing users to navigate through multiple pages. This creates frustration and confusion. Legal teams often draft consent forms with complex, jargon-filled language, leaving users unclear about how their data is being used. A 2022 Pew Research Center study revealed that 59% of Americans don’t understand what companies do with their data.

In some cases, withdrawing consent requires users to contact customer support, send emails, or complete lengthy verification steps. The AI CONSENT Act proposes that revoking consent should be as simple as granting it.

| Common Consent Problems | Impact on Users | Business Risk |
|---|---|---|
| Too many requests | Overwhelm, abandonment | Low opt-in rates, compliance risks |
| Multi-platform complexity | Confusing experiences | Regulatory issues, customer dissatisfaction |
| Hard-to-find settings | Frustration, lack of control | Legal exposure, loss of trust |

These challenges are driving more businesses to adopt centralized consent management tools. By unifying consent across platforms, simplifying interfaces, and automating compliance, companies can better respect customer privacy while still leveraging AI technology effectively.


How to Set Up Better Consent Systems

Building a strong consent system isn’t just about ticking legal boxes - it’s about creating a user experience that’s transparent, easy to navigate, and inspires trust. Done right, these systems can increase opt-in rates, reduce complaints, and keep your business compliant with regulations. Here’s how to design, manage, and evaluate a consent framework that works.

Creating Clear Consent Forms

A solid consent system begins with forms that are simple and easy to understand. Instead of hiding consent language in dense legal documents, successful companies make these forms stand out, separate from terms of service. This ensures users can focus on understanding how their data will be used.

- Use plain language. Avoid jargon like "algorithmic processing" or "machine learning optimization." Instead, say something like, "We use your messages to improve our AI responses." This makes your intentions clear, which builds trust with users.

- Design intuitive options. Make sure opt-in and opt-out buttons are equally visible. Many companies use toggle switches that show clear "on" and "off" states, making it obvious what users are agreeing to.

- Offer layered information. Start with a quick summary of what data you’re collecting and why, then include links for users who want more detail. This approach caters to both users who want to make fast decisions and those who prefer to dig deeper.

For instance, a major U.S. bank saw a boost in customer trust and compliance by using a dashboard-based consent system. They kept the language simple, provided granular controls, and offered a one-click opt-out option. This case highlights the importance of transparency and user control in creating a positive experience.

Using Inbox Agents for Consent Management

Managing consent across multiple platforms can be chaotic, but centralized tools like Inbox Agents simplify the process. This platform brings all messaging channels - email, SMS, social media, and chat - into one interface, making consent management consistent and efficient.

- Automated consent prompts. Inbox Agents automatically includes consent requests in customer interactions when required, ensuring compliance without extra manual effort.

- Centralized tracking. If a customer opts out on one channel, their preference is automatically applied across all communication methods.

- AI-powered features. For customers who have opted in, the platform’s smart replies and summaries can include consent status, ensuring AI responses are only sent to those who’ve agreed.

"We never use your messages for advertising or to train generalized AI models. We comply with GDPR, CCPA, and Google API Services policies. All data processing is done solely to provide the features you enable."

This kind of system not only simplifies compliance but also ensures users feel secure about how their data is being handled.

Collecting Less Data and Checking Privacy Risks

One of the simplest ways to reduce consent complexity and legal risks is to collect only the data you truly need. When you minimize data collection, consent forms become shorter, storage costs drop, and the impact of potential breaches is reduced.

- Stick to the purpose. Only use data for the specific functionality that users have agreed to. Avoid repurposing data for advertising or training broader AI models. This builds trust and makes your consent process simpler.

- Conduct regular privacy reviews. Schedule privacy risk assessments to identify potential compliance issues before they escalate. These reviews should cover what data you’re collecting, how it’s being used, who has access to it, and whether consent covers all activities. Many companies conduct these reviews quarterly, though some industries may require more frequent checks.

- Document everything. Keep detailed records of consent events, including the date, time, method, and specific disclosures shown to users. Automated systems can help maintain accurate logs, making audits and compliance reporting much easier.
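To make the "document everything" point concrete, here is a minimal sketch of an append-only consent log that captures the date, time, method, and disclosure shown for each event. The `ConsentEvent` fields, the `record_event` helper, and the JSON-lines storage format are assumptions chosen for illustration, not a required schema.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class ConsentEvent:
    customer_id: str
    action: str              # "granted" or "withdrawn"
    method: str              # how it was captured, e.g. "web_toggle"
    disclosure_version: str  # identifies the exact disclosure text shown
    timestamp: str           # ISO 8601 date and time in UTC


def record_event(
    log: list[str],
    customer_id: str,
    action: str,
    method: str,
    disclosure_version: str,
    now: Optional[datetime] = None,
) -> ConsentEvent:
    """Append one consent event to the log as a JSON line."""
    if action not in ("granted", "withdrawn"):
        raise ValueError(f"unknown action: {action!r}")
    moment = now if now is not None else datetime.now(timezone.utc)
    event = ConsentEvent(
        customer_id=customer_id,
        action=action,
        method=method,
        disclosure_version=disclosure_version,
        timestamp=moment.isoformat(),
    )
    log.append(json.dumps(asdict(event), sort_keys=True))
    return event


audit_log: list[str] = []
record_event(audit_log, "cust-1", "granted", "web_toggle", "disclosure-v3")
record_event(audit_log, "cust-1", "withdrawn", "email_link", "disclosure-v3")
print(len(audit_log))  # 2
```

Storing a disclosure version rather than free text keeps each entry small while still letting an auditor look up exactly what the user saw.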

When companies adopt data minimization strategies, they often find their consent forms naturally become more user-friendly. With less data to explain and fewer uses to disclose, the process feels simpler and more transparent for users. This creates a positive feedback loop: better privacy practices lead to better user experiences, which in turn strengthen customer trust and loyalty. By focusing on these measures, businesses can ensure compliance while building stronger relationships with their users.
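A privacy review can include a simple data-minimization check: compare what is collected against what the consented purposes actually need. The sketch below assumes a hypothetical `FIELDS_NEEDED` mapping from purposes to data fields; the purpose and field names are invented for illustration.

```python
# Hypothetical mapping from each purpose to the data fields it needs.
FIELDS_NEEDED: dict[str, set[str]] = {
    "ai_replies": {"message_text"},
    "order_updates": {"email", "order_id"},
}


def unneeded_fields(collected: set[str], consented_purposes: set[str]) -> set[str]:
    """Return collected fields that no consented purpose requires."""
    needed: set[str] = set()
    for purpose in consented_purposes:
        needed |= FIELDS_NEEDED.get(purpose, set())
    return collected - needed


flagged = unneeded_fields(
    collected={"message_text", "email", "location"},
    consented_purposes={"ai_replies"},
)
print(sorted(flagged))  # ['email', 'location']
```

Any field that shows up in the result is a candidate either for removal or for a clearer consent request.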

Comparing Different Consent Methods

Not all consent systems are created the same. Each method offers varying levels of user control, legal safeguards, and operational efficiency. Choosing the right approach depends on a company’s specific needs and the regulatory framework it operates within.

In the U.S., consent methods range from blanket consent to just-in-time systems. Explicit opt-in offers strong legal protection under laws like the CCPA and other state-specific regulations. On the other hand, implicit opt-out consent, often seen in cookie banners or email campaigns, assumes user agreement unless they actively decline. While this method is easier to implement, it carries greater regulatory risks and may not comply with stricter state laws.

Granular consent lets users decide how their data is used - for instance, agreeing to AI-driven customer service but rejecting its use for marketing analytics. This approach builds trust but requires more complex technical systems. Meanwhile, just-in-time consent requests permissions at the moment data is collected, offering transparency but potentially disrupting workflows.
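Part of the added technical complexity of granular consent is that every data use needs its own check. A minimal sketch, assuming a hypothetical `Purpose` enum and an in-memory `GranularConsent` store, might look like this:

```python
from enum import Enum


class Purpose(Enum):
    AI_CUSTOMER_SERVICE = "ai_customer_service"
    MARKETING_ANALYTICS = "marketing_analytics"


class GranularConsent:
    """Tracks which purposes each user has agreed to; default is no consent."""

    def __init__(self) -> None:
        self._granted: dict[str, set[Purpose]] = {}

    def grant(self, user_id: str, purpose: Purpose) -> None:
        self._granted.setdefault(user_id, set()).add(purpose)

    def withdraw(self, user_id: str, purpose: Purpose) -> None:
        # Withdrawing is a single call, mirroring how consent is granted.
        self._granted.get(user_id, set()).discard(purpose)

    def allows(self, user_id: str, purpose: Purpose) -> bool:
        return purpose in self._granted.get(user_id, set())


consent = GranularConsent()
consent.grant("user-42", Purpose.AI_CUSTOMER_SERVICE)

print(consent.allows("user-42", Purpose.AI_CUSTOMER_SERVICE))  # True
print(consent.allows("user-42", Purpose.MARKETING_ANALYTICS))  # False
```

Each feature would call `allows` before touching user data, so a user who agreed to AI-driven customer service is never swept into marketing analytics by default.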

Consent System Feature Comparison

The effectiveness of consent methods varies across business and compliance metrics. Choosing the right system can mean the difference between smooth operations and costly regulatory penalties. For instance, under Texas's Responsible Artificial Intelligence Governance Act (TRAIGA), which takes effect in January 2026, violations could cost companies between $10,000 and $200,000 per incident.

| Consent Method | Opt-in Visibility | Cancellation Ease | Legal Compliance Level | User Control | Regulatory Risk | Typical Use Cases |
|---|---|---|---|---|---|---|
| Explicit Opt-in | High | High | High | High | Low | Sensitive data, biometric collection, AI training |
| Implicit Opt-out | Medium | Medium | Medium | Medium | Medium | Marketing emails, general analytics |
| Granular Consent | High | High | High | High | Low | AI personalization, multi-purpose data |
| Just-in-Time | High | High | High | High | Low | Real-time data collection, chatbots |
| Blanket Consent | Low | Low | Low | Low | High | Legacy systems, basic terms of service |

These comparisons highlight how different methods align with specific needs. For example, explicit opt-in systems are ideal for high-stakes industries like finance and healthcare, where regulatory compliance is non-negotiable. These systems provide clear records of user consent but can reduce initial sign-up rates since users must actively engage with the process. Meanwhile, granular consent is particularly effective for AI-driven businesses that collect data for multiple purposes. Users often appreciate the ability to allow certain uses, like improving customer service, while blocking others, such as advertising - leading to higher overall consent rates.

A centralized solution can simplify consent management across platforms. For instance, tools like Inbox Agents streamline this process by syncing consent preferences across email, SMS, social media, and chat channels. If a customer updates their preferences in one channel, the changes automatically apply to others. This unified approach not only simplifies management but also ensures consistent compliance.

Using clear, straightforward consent forms with visible opt-in and opt-out options can enhance user satisfaction and reduce compliance risks. In contrast, practices like pre-checked boxes or hidden cancellation processes often invite regulatory scrutiny and customer dissatisfaction.

For businesses operating in multiple states, it’s critical to recognize that consent requirements can vary widely. While California’s CCPA grants consumers opt-out rights for data sharing, Texas’s upcoming TRAIGA demands more explicit disclosures and consent for AI-related interactions. Companies relying on blanket consent systems often struggle to meet these diverse requirements, whereas granular systems are better suited to adapt.

Conclusion: Building Customer Trust With Proper AI Consent

Gaining customer consent in the realm of AI isn't just about ticking legal boxes - it's about laying the groundwork for meaningful, long-term relationships. When businesses handle consent thoughtfully, they gain more than compliance - they gain trust, loyalty, and a real edge in the marketplace.

The numbers make it clear. Recent surveys show that 79% of U.S. adults want to know when they're interacting with AI, and 68% are more likely to trust companies that are upfront about its use. This isn't just a nice-to-have; it's a business opportunity. Companies that prioritize transparency in their consent practices often experience fewer data-related complaints and see improved customer retention rates.

The stakes are even higher with new regulations. For instance, Texas's TRAIGA introduces penalties of $10,000 to $200,000 per violation, and similar laws are emerging across the country. Mishandling consent could be costly, but doing it right offers tremendous value.

Clear, easy-to-understand consent forms and straightforward opt-out options aren't just good practice - they're essential. These steps not only satisfy legal requirements but also enhance customer satisfaction, turning what could feel like a compliance task into a trust-building opportunity.

Consent should be seen as an ongoing dialogue, not a one-and-done event. Tools like Inbox Agents simplify this process by centralizing consent management across all communication channels. When customers update their preferences, these changes are applied seamlessly across the board. This approach not only reduces administrative headaches but also gives customers the control they expect. By aligning with evolving regulations, businesses can shift consent from being a legal formality to a cornerstone of ethical data handling.

Companies that adopt transparent and straightforward consent practices are better equipped to navigate new regulations, avoid penalties, and, most importantly, earn the trust of their customers. In a world increasingly shaped by AI, consent isn't just about getting permission - it's about building a partnership that fosters loyalty and long-term success.

FAQs

How can businesses ensure customer consent is managed effectively across platforms while following state-specific laws?

Managing customer consent across various platforms while adhering to different state laws can be a complex task. The best way to tackle this is by using centralized consent management systems. These systems allow businesses to track, update, and store consent preferences in one unified location. This not only ensures consistency but also makes navigating compliance with diverse regulations much simpler.

For state-specific laws like California's CCPA or Virginia's CDPA, staying up-to-date with the latest requirements is crucial. One effective approach is to use dynamic consent forms that automatically adjust based on the user's location. Tools powered by AI, such as those offered by Inbox Agents, can play a pivotal role here. They help automate the process of consent tracking, making it easier to maintain compliance across all communication channels.

Equally important is maintaining clear and transparent communication with customers. Always let them know how their data will be used and offer straightforward options to modify or withdraw their consent whenever they choose.

What risks do companies face if they don’t get proper customer consent for AI data usage?

Failing to secure proper customer consent for AI data usage can spell trouble on multiple fronts - legal, financial, and reputational. Companies risk facing steep fines or lawsuits for breaching privacy laws such as the California Consumer Privacy Act (CCPA) or the General Data Protection Regulation (GDPR), depending on where they operate. But the fallout doesn’t stop there. Mishandling consent can also erode customer trust, potentially leading to lost business and a tarnished brand image.

On the flip side, being transparent about consent isn’t just about compliance - it’s about showing respect for customer privacy. Clear, easy-to-understand consent processes can help businesses strengthen relationships with their customers while staying ahead of legal requirements. It’s a win-win for both sides.

How can businesses reduce user consent fatigue while staying compliant with AI regulations?

To tackle consent fatigue and stay aligned with AI-related regulations, businesses should aim for consent processes that are simple, clear, and user-focused. Use plain, straightforward language in consent requests, steering clear of technical jargon. This way, users can easily grasp how their data will be handled.

One effective approach is to introduce layered consent. This setup lets users see the key details upfront while offering the option to dive into more in-depth explanations if they want. Giving users flexible choices - like the ability to opt in or out of specific data uses - can also build trust and show a commitment to transparency.

It’s important to regularly review and refine consent practices to meet changing regulations and user expectations. By focusing on clarity and empowering users, businesses can ease consent fatigue while staying compliant.