Training AI Models with Privacy in Mind

AI models can be trained while protecting user privacy. Techniques like federated learning, differential privacy, and encryption help keep sensitive data secure during training. These methods limit raw data exposure, help organizations comply with regulations like GDPR and CCPA, and reduce risks such as data breaches, model inversion, and membership inference attacks.

Here’s what you need to know:

- Federated Learning: Keeps data on user devices, sharing only model updates.

- Differential Privacy: Adds noise to data or outputs, reducing data re-identification risks.

- Homomorphic Encryption & SMPC: Perform computations on encrypted data or securely collaborate without exposing raw data.

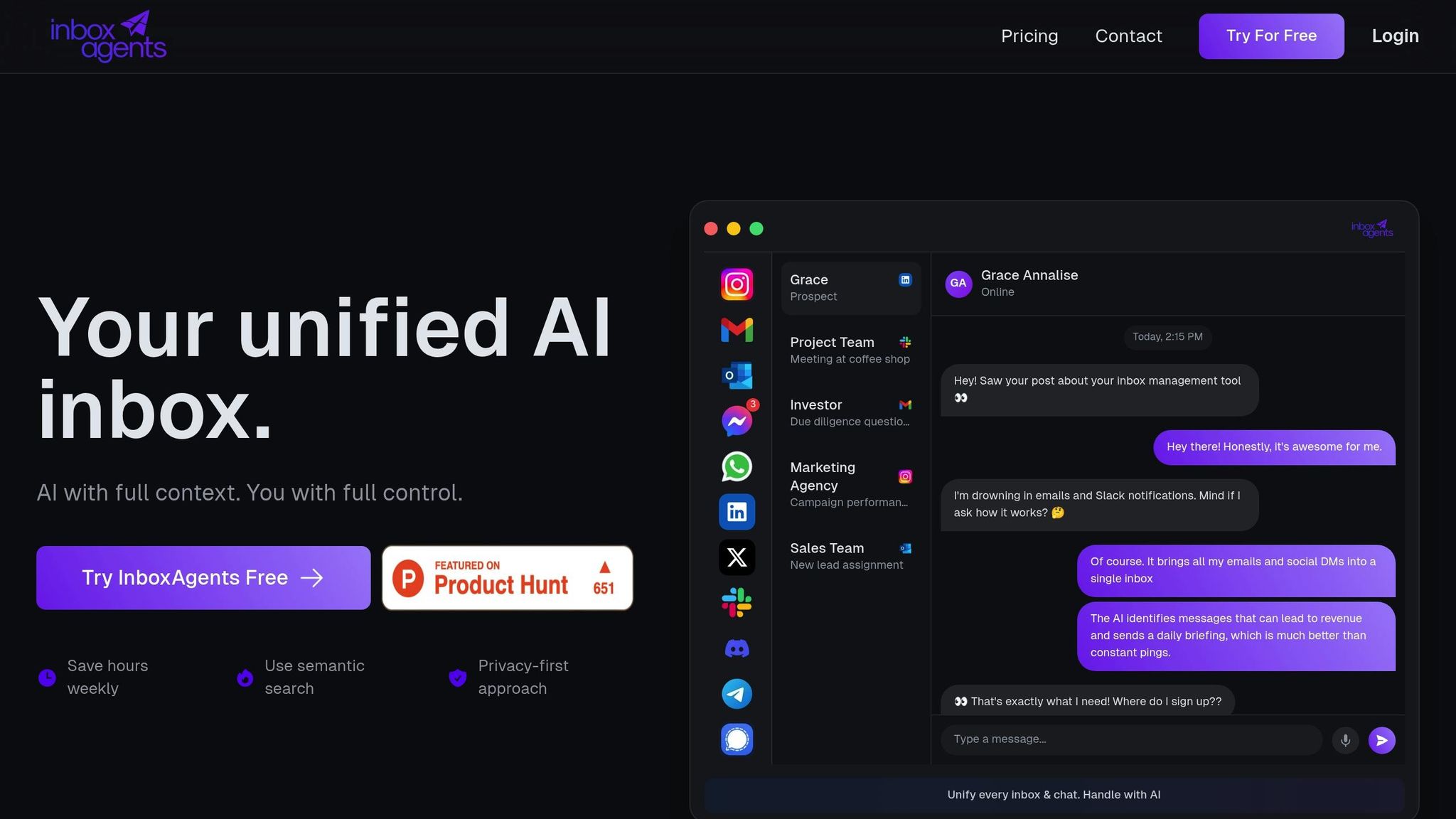

Platforms like Inbox Agents take a privacy-first approach to features such as smart replies and spam filtering. They prioritize encryption, strict access controls, and compliance with privacy laws, and they do not use message content for advertising or to train generalized AI models.

Key Takeaway: Privacy-preserving AI is essential for building trust, meeting legal requirements, and mitigating risks. Combining these techniques with regular audits and monitoring strengthens data security and supports ethical AI development.


Core Privacy-Preserving Techniques for AI Training

Preserving privacy during AI training involves methods like federated learning, differential privacy, and cryptographic techniques such as homomorphic encryption and Secure Multi-Party Computation (SMPC). Each addresses specific privacy concerns, and organizations often combine them for added security. These strategies safeguard data throughout the AI training process, from collection to deployment.

Federated Learning

Federated learning changes the way AI models are trained by keeping sensitive data on local devices or servers. Instead of sending raw data to a central location, this method trains models directly on distributed devices, sharing only the updated model parameters with a central server.

Major tech companies use federated learning to keep user data on-device during training; Google, for example, has used it to improve next-word prediction in its Gboard keyboard. Platforms like Inbox Agents could use the same approach to develop features like smart replies or abuse detection directly on user devices, so private message content would never need to leave the user's environment.

While federated learning improves privacy and makes it easier to learn from diverse data sources without centralizing them, it comes with its own challenges: frequent communication between devices and servers, uneven quality of local data, and the risk that shared model updates could still leak sensitive information. For platforms like Inbox Agents, the technique reduces risk while enabling advanced on-device features.
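To make the "share parameters, not data" idea concrete, here is a minimal sketch of federated averaging (FedAvg), assuming a toy one-feature linear model and three simulated clients running in one process. The function names and data are hypothetical; a real deployment would add networking, client sampling, and protection for the updates themselves.

```python
import random

def local_train(w, b, data, lr=0.1, epochs=5):
    """Client side: a few epochs of SGD on y = w*x + b using only this client's data."""
    for _ in range(epochs):
        for x, y in data:
            err = (w * x + b) - y
            w -= lr * err * x
            b -= lr * err
    return w, b

def federated_round(global_w, global_b, clients):
    """Server side: send the global model out, then average the returned parameters,
    weighted by each client's number of examples (FedAvg)."""
    updates = []
    for data in clients:
        w, b = local_train(global_w, global_b, data)
        updates.append((w, b, len(data)))  # only parameters and a count are shared
    total = sum(n for _, _, n in updates)
    new_w = sum(w * n for w, _, n in updates) / total
    new_b = sum(b * n for _, b, n in updates) / total
    return new_w, new_b

# Three simulated clients whose private data follows y = 2x + 1.
rng = random.Random(0)
clients = [[(x, 2 * x + 1) for x in (rng.uniform(0, 1) for _ in range(20))] for _ in range(3)]

w, b = 0.0, 0.0
for _ in range(50):
    w, b = federated_round(w, b, clients)
print(f"w={w:.2f}, b={b:.2f}")  # approaches w=2, b=1
```

The server never touches `clients` data directly in `federated_round`'s averaging step; it only combines the `(w, b, count)` tuples each client returns.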

Differential Privacy

Differential privacy uses mathematical methods to protect individual data by introducing carefully calibrated noise into datasets, model outputs, or the training process itself. The goal is to ensure that the inclusion or exclusion of a single individual's data has minimal impact on the overall result. Microsoft, working with the OpenDP project, developed the SmartNoise framework, and Google deployed its RAPPOR system to collect Chrome usage statistics with differential privacy.
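The "minimal impact" goal has a standard formal definition. A randomized mechanism $\mathcal{M}$ satisfies $\varepsilon$-differential privacy if, for every pair of datasets $D$ and $D'$ that differ in one individual's record and every set of possible outputs $S$:

```latex
\Pr[\mathcal{M}(D) \in S] \le e^{\varepsilon} \, \Pr[\mathcal{M}(D') \in S]
```

A smaller $\varepsilon$ means the output distribution barely changes whether or not any one person is included, so privacy is stronger. Many training libraries use the relaxed $(\varepsilon, \delta)$ variant, which adds a small slack term: $\Pr[\mathcal{M}(D) \in S] \le e^{\varepsilon} \Pr[\mathcal{M}(D') \in S] + \delta$.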

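This technique comes in two main forms. Local differential privacy adds noise on the user's device before any data is shared, so no one, including the data collector, sees raw values. Central differential privacy relies on a trusted aggregator that collects the raw data and adds noise to aggregate results, which typically gives better accuracy for the same level of privacy.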
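In the central model, the classic building block is the Laplace mechanism. The sketch below releases a noisy count, assuming a trusted aggregator and a hypothetical "opened a summary" usage flag. Because adding or removing one person changes a count by at most 1, Laplace noise with scale 1/ε yields ε-differential privacy. Production libraries such as OpenDP also guard against floating-point pitfalls that this illustration ignores.

```python
import random

def laplace_noise(scale, rng):
    """Laplace(0, scale) sample: the difference of two exponentials with mean `scale`."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)

def dp_count(records, predicate, epsilon, rng):
    """Central DP count. The trusted aggregator sees the raw records, computes the
    exact count, and releases it with Laplace noise. One person changes a count by
    at most 1 (sensitivity 1), so scale = 1/epsilon gives epsilon-DP."""
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1 / epsilon, rng)

# Hypothetical usage records: 100 of 300 users opened an inbox summary.
records = [{"opened_summary": i % 3 == 0} for i in range(300)]
rng = random.Random(42)
print(dp_count(records, lambda r: r["opened_summary"], epsilon=0.5, rng=rng))
```

Each released value is noisy, but the noise is unbiased, so aggregate trends stay visible while any one user's contribution is masked.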

By masking individual contributions, differential privacy helps defend against attacks like membership inference or model inversion. It also lets organizations quantify privacy loss through a privacy budget, usually written as ε (epsilon), which is particularly useful for meeting regulatory requirements and assessing risks.

Homomorphic Encryption and SMPC

Cryptographic methods provide another layer of protection beyond statistical noise.

Homomorphic encryption allows computations to be performed directly on encrypted data, ensuring that sensitive information remains protected even during processing. While this approach is highly secure, it requires significant computational resources, making it less practical for large-scale operations.
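To show what "computing on encrypted data" means, here is a toy version of the Paillier cryptosystem, a textbook scheme that is additively homomorphic: multiplying two ciphertexts produces an encryption of the sum of their plaintexts. This is an illustration only, assuming tiny insecure primes and Python 3.8+ (for `pow(x, -1, n)`); fully homomorphic schemes that support richer computation are considerably more complex.

```python
import math
import random

def keygen(p, q):
    """Toy Paillier keys from two primes. Real keys use primes of 1024+ bits."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p-1, q-1)
    mu = pow(lam, -1, n)  # with generator g = n + 1, mu is simply lam^-1 mod n
    return n, (lam, mu)

def encrypt(n, m, rng):
    """Encrypt m as (n+1)^m * r^n mod n^2 with a random r coprime to n."""
    n2 = n * n
    r = rng.randrange(1, n)
    while math.gcd(r, n) != 1:
        r = rng.randrange(1, n)
    return (pow(n + 1, m, n2) * pow(r, n, n2)) % n2

def decrypt(n, priv, c):
    lam, mu = priv
    u = pow(c, lam, n * n)
    return ((u - 1) // n) * mu % n

def add_encrypted(n, c1, c2):
    """Multiplying two ciphertexts yields an encryption of the sum of the plaintexts."""
    return (c1 * c2) % (n * n)

n, priv = keygen(293, 433)  # tiny, insecure primes for illustration only
rng = random.Random(7)      # not a cryptographic RNG; use `secrets` in real code
c_sum = add_encrypted(n, encrypt(n, 15, rng), encrypt(n, 27, rng))
print(decrypt(n, priv, c_sum))  # 42
```

The party that adds the ciphertexts never sees 15 or 27; only the key holder can decrypt the result. Even in this toy, each operation involves large modular exponentiations, which hints at why the technique is costly at scale.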

Secure Multi-Party Computation (SMPC) enables multiple parties to collaborate on training AI models without exposing their individual datasets. Using cryptographic protocols such as secret sharing, each participant's inputs stay hidden from the others, and only the agreed-upon output, such as a combined model update or an aggregate statistic, is revealed.
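Secret sharing is one of the basic building blocks behind many SMPC protocols. The sketch below simulates a secure sum with additive secret sharing in a single process, assuming honest-but-curious parties; the organizations and counts are hypothetical.

```python
import random

PRIME = 2**61 - 1  # all arithmetic is done modulo this prime

def make_shares(secret, n_parties, rng):
    """Split a secret into n random shares that sum to the secret mod PRIME."""
    shares = [rng.randrange(PRIME) for _ in range(n_parties - 1)]
    shares.append((secret - sum(shares)) % PRIME)
    return shares

def secure_sum(secrets, rng):
    """Simulate n parties computing the sum of their private inputs."""
    n = len(secrets)
    # Party i creates shares of its input and sends share j to party j.
    shares = [make_shares(s, n, rng) for s in secrets]
    # Party j adds the shares it received; each share looks uniformly random on its own.
    partials = [sum(shares[i][j] for i in range(n)) % PRIME for j in range(n)]
    # Publishing the partial sums reveals only the total.
    return sum(partials) % PRIME

# Hypothetical: three organizations each hold a private count of flagged accounts.
print(secure_sum([120, 75, 310], random.Random(3)))  # 505
```

No party ever holds another party's input; a real protocol would also use a cryptographic RNG and secure channels between parties.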

These techniques are especially valuable in fields like healthcare or finance, where strict regulations make traditional data sharing risky. However, both homomorphic encryption and SMPC are resource-intensive and can be difficult to scale with large datasets or complex models. For platforms like Inbox Agents, these methods are critical when handling highly sensitive communications.

| Technique | Data Exposure Risk | Computational Cost | Ideal Use Cases | Main Limitation |
|---|---|---|---|---|
| Federated Learning | Low | Moderate | Mobile apps, healthcare | Communication overhead |
| Differential Privacy | Very Low | Low–Moderate | Analytics, public data | Privacy–utility trade-off |
| Homomorphic Encryption | Very Low | High | Financial collaborations | Resource intensive |
| SMPC | Very Low | High | Research across institutions | Scalability challenges |

Choosing the right technique depends on factors like privacy needs, computing power, and regulatory guidelines. Many organizations find success by combining approaches - for example, pairing federated learning with differential privacy - to create secure and practical solutions.

Implementing Privacy-Preserving Techniques in Practice

Bringing privacy-preserving methods from concept to reality in SaaS demands careful planning. This involves addressing various data types, meeting regulatory requirements, and maintaining high-quality standards.

Data Collection and Anonymization Strategies

To protect user privacy, removing personally identifiable information (PII) is a crucial first step. This involves scanning datasets to strip out details like names, email addresses, and phone numbers before any model training begins. Techniques like data masking and tokenization go a step further by replacing sensitive details with synthetic substitutes that retain the structure of the data but not the actual values.
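A minimal sketch of masking and tokenization, assuming regex detection of emails and phone numbers and a hypothetical secret key. Phone numbers are masked outright, while emails are replaced by keyed, deterministic tokens that keep the email format, so records can still be joined without storing the original address. Regexes like these are illustrative only; detecting names reliably usually requires entity-recognition tooling.

```python
import hashlib
import hmac
import re

# Hypothetical key; in practice, load it from a secrets manager and rotate it.
TOKEN_KEY = b"example-key-do-not-use"

EMAIL_RE = re.compile(r"\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")

def token(value):
    """Keyed, deterministic token: the same input always yields the same token,
    so records can still be joined, but the original value is not stored."""
    return hmac.new(TOKEN_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()[:12]

def pseudonymize(text):
    # Mask phone numbers first so digit runs inside email tokens are never re-matched.
    text = PHONE_RE.sub("[PHONE]", text)
    # Replace each email with a synthetic address that keeps the email format.
    return EMAIL_RE.sub(lambda m: f"user_{token(m.group(0).lower())}@example.invalid", text)

print(pseudonymize("Contact Jane.Doe@example.com or +1 (415) 555-0132."))
```

Using an HMAC rather than a plain hash means someone without the key cannot rebuild the mapping by hashing a list of known addresses.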

Another effective method is local differential privacy, where mathematical noise is added to data directly on user devices before it's transmitted. This ensures that even if the data is intercepted, individual contributions remain secure. Additionally, synthetic data generation creates artificial datasets that mimic the statistical properties of real data, enabling model training without exposing actual user information.
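Randomized response is the simplest local differential privacy mechanism. Each device reports its true yes/no value with probability e^ε / (e^ε + 1) and the flipped value otherwise, so any single report is deniable; the server then corrects for the known flip rate. This sketch assumes a hypothetical binary attribute and simulated users.

```python
import math
import random

def randomized_response(true_bit, epsilon, rng):
    """On-device: report the true bit with probability e^eps / (e^eps + 1), otherwise flip it.
    The ratio of report probabilities for any two inputs is at most e^eps (epsilon-local DP)."""
    p_truth = math.exp(epsilon) / (math.exp(epsilon) + 1)
    return true_bit if rng.random() < p_truth else 1 - true_bit

def estimate_rate(reports, epsilon):
    """Server: debias the noisy reports. E[observed] = (1 - p) + rate * (2p - 1)."""
    p = math.exp(epsilon) / (math.exp(epsilon) + 1)
    observed = sum(reports) / len(reports)
    return (observed - (1 - p)) / (2 * p - 1)

# Hypothetical: 30% of 50,000 users have a feature enabled.
rng = random.Random(0)
true_bits = [1 if i < 15_000 else 0 for i in range(50_000)]
reports = [randomized_response(bit, epsilon=1.0, rng=rng) for bit in true_bits]
print(round(estimate_rate(reports, epsilon=1.0), 3))  # close to 0.3
```

The server learns the population rate fairly accurately, yet it cannot tell whether any individual report is truthful.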

Once data has been anonymized, the next step is to choose privacy-preserving techniques that align with your platform's specific risks and operational needs.

Choosing the Right Technique

Selecting the right method depends on factors like data sensitivity, regulatory compliance, and operational constraints. Conducting a detailed risk assessment is essential to identify specific privacy challenges.

Each technique has its strengths and is suited to particular scenarios. For example:

- Federated learning is ideal for situations where data cannot leave user devices.

- Differential privacy works well for large-scale analytics requiring mathematical privacy guarantees.

- Homomorphic encryption or secure multi-party computation (SMPC) are best for highly sensitive, collaborative tasks.

Before full-scale deployment, pilot testing can help evaluate performance, privacy protection, and resource demands, ensuring the chosen approach meets both technical and privacy goals.

Practical Applications for Inbox Agents

Inbox Agents exemplifies how privacy-preserving methods can enhance functionality while safeguarding user data. The platform's privacy-first design ensures that user messages are never used for advertising or training generalized AI models.

"InboxAgents uses a privacy-first approach with encryption in transit and at rest, strict access controls, and industry-standard security practices. We never use your messages for advertising or to train generalized AI models. We comply with GDPR, CCPA, and Google API Services policies. All data processing is done solely to provide the features you enable." – InboxAgents

For example, federated learning could power smart reply features by training models directly on user devices. Instead of sharing raw data, devices would transmit only protected model updates, allowing the system to learn communication patterns locally. Similarly, differential privacy could support usage analytics for features like automated inbox summaries by adding noise to aggregated statistics, so overall behavior can be measured without exposing any individual's activity.
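One way to keep individual model updates hidden from the server is secure aggregation. The sketch below shows only its core pairwise-masking idea, assuming a fixed set of clients that all stay online. The seed derivation is a hypothetical stand-in for a secret two clients would agree on via key exchange, and real protocols also handle clients that drop out mid-round.

```python
import random

MOD = 2**32    # masked arithmetic happens modulo 2^32
SCALE = 1000   # fixed-point scale for quantizing float updates

def encode(x):
    return round(x * SCALE) % MOD

def decode(v):
    v = v - MOD if v >= MOD // 2 else v  # map back to the signed range
    return v / SCALE

def mask_update(client, all_clients, update):
    """Client-side: quantize the update and add pairwise masks that cancel in the sum."""
    masked = [encode(x) for x in update]
    for other in all_clients:
        if other == client:
            continue
        lo, hi = min(client, other), max(client, other)
        # Hypothetical stand-in for a secret the two clients agree on via key exchange.
        pair_rng = random.Random(f"pair-{lo}-{hi}")
        sign = 1 if client == lo else -1  # one side adds the mask, the other subtracts it
        for k in range(len(masked)):
            masked[k] = (masked[k] + sign * pair_rng.randrange(MOD)) % MOD
    return masked

def aggregate(masked_updates):
    """Server-side: sum masked vectors; pairwise masks cancel, leaving only the total."""
    total = [sum(col) % MOD for col in zip(*masked_updates)]
    return [decode(v) for v in total]

updates = {1: [0.5, -0.25], 2: [0.1, 0.3], 3: [-0.2, 0.05]}  # hypothetical client updates
clients = sorted(updates)
masked = [mask_update(c, clients, updates[c]) for c in clients]  # what the server receives
print(aggregate(masked))  # [0.4, 0.1]
```

Each masked vector looks random to the server, but the sum it computes equals the sum of the true updates.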

For spam and abuse detection, secure multi-party computation could let multiple organizations collaborate without sharing actual message data. Layering these approaches addresses privacy risks at every stage of the AI training process.

Strict access controls, encryption, and compliance with GDPR, CCPA, and Google API Services policies add further protection, while continuous monitoring and regular audits help keep these techniques effective as the platform scales and new features are introduced.


Privacy Auditing and Compliance for AI Models

Privacy audits play a key role in identifying regulatory risks and potential data breaches. These assessments ensure that data collection, processing, and storage align with U.S. regulations such as the California Consumer Privacy Act (CCPA) and HIPAA, as well as international frameworks like the GDPR and the EU AI Act. By building on established privacy practices, audits help maintain consistent data protection throughout the AI lifecycle.

Conducting Privacy Audits

A solid privacy audit starts with mapping out how data flows through your AI system. This means tracking where data enters, how it’s processed, and where it’s stored. By identifying every touchpoint, you can spot vulnerabilities and areas of risk.

The next step is to classify personal data. Identify key fields such as names, emails, phone numbers, and other sensitive details that could expose individual identities. This classification helps you apply the right privacy protections to different types of data.

Review data minimization practices to ensure your models only use the information necessary for their purpose. Confirm that anonymization techniques are properly implemented, and check that your training processes incorporate privacy-preserving methods like differential privacy or federated learning.

Access controls and security protocols should also be part of the audit. Assess who can access training data, how permissions are managed, and whether encryption safeguards data during storage and transmission. Document all findings and corrective actions for compliance reporting. This process reinforces a privacy-first approach to AI model development.

Tools and Frameworks for Compliance

Once you’ve outlined the audit process, it’s time to leverage tools and frameworks that support ongoing compliance. Frameworks like the NIST Privacy Framework provide structured guidance, while tools such as TensorFlow Privacy and Microsoft’s SmartNoise help implement privacy measures. Audit management platforms can further streamline tracking, reporting, and documentation.

For privacy-preserving methods, open-source libraries offer practical solutions. Frameworks like PySyft and TensorFlow Federated enable techniques like federated learning and secure computation. These tools allow you to train models without centralizing sensitive data, ensuring compliance while maintaining a clear documentation trail for regulatory reviews.

Continuous Compliance Monitoring

Maintaining privacy compliance is not a one-and-done task - it requires continuous effort. Regularly updating privacy practices to reflect evolving regulations and emerging risks is essential. Static measures can quickly become outdated, making ongoing reviews a necessity.

Automated monitoring systems can provide real-time insights by tracking data access patterns and flagging unusual activity. Alerts for potential violations or anomalies help address issues promptly. Scheduling periodic reviews, especially after model updates or new feature releases, ensures that privacy protections remain effective.
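A minimal sketch of flagging unusual access patterns, assuming a hypothetical access log of `(account, dataset)` read events. It uses a robust z-score (median and median absolute deviation) so that a single extreme account cannot hide itself by inflating the spread, which an ordinary mean/standard-deviation check is prone to in small teams. The 3.5 cutoff is a common rule of thumb, not a standard.

```python
from collections import Counter
from statistics import median

def flag_unusual_access(access_log, threshold=3.5):
    """Flag accounts whose read volume is far above the typical account's.
    access_log is an iterable of (account, dataset) read events (hypothetical format)."""
    counts = Counter(account for account, _ in access_log)
    values = list(counts.values())
    med = median(values)
    mad = median(abs(v - med) for v in values) or 1  # avoid dividing by zero
    # 0.6745 rescales the MAD so the score is comparable to a normal z-score.
    return sorted(a for a, c in counts.items() if 0.6745 * (c - med) / mad > threshold)

# Hypothetical log: ten analysts with routine reads and one service account bulk-reading.
log = [(f"analyst_{i}", "training_emails") for i in range(10) for _ in range(20 + i % 5)]
log += [("svc_export", "training_emails")] * 400
print(flag_unusual_access(log))  # ['svc_export']
```

In practice, flagged accounts would feed an alerting system for review rather than being blocked automatically.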

Embedding compliance checks into the AI development lifecycle keeps privacy front and center when introducing new features. Regular training for development and operations teams on current privacy requirements and best practices further strengthens your privacy strategy.

For example, if a messaging platform introduces AI-powered features like smart replies or spam filtering, audits should confirm that these additions respect user privacy while delivering their intended functionality. Each audit builds on previous findings, creating a more robust and scalable approach to privacy as your platform evolves and adopts new AI capabilities.

Detecting and Fixing Privacy Violations

Even with careful AI development, privacy violations can sometimes slip through the cracks. When they do, quickly identifying and addressing these issues is essential for maintaining user trust and staying compliant with regulations. To achieve this, organizations need solid systems that can spot problems early and respond effectively.

Identifying Privacy Risks

Simulated privacy attacks are a proactive way to uncover vulnerabilities in AI models before they go live. These tests mimic real-world attack methods to see if sensitive information can be extracted.

Membership inference attacks are one such method. They test whether an attacker can figure out if a specific individual's data was part of the model's training set. A 2023 NIST report highlighted that unprotected models can be vulnerable, with such attacks succeeding up to 80% of the time. These simulations work by feeding the model known and unknown data points, then analyzing the responses to uncover patterns that reveal training data membership.
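One of the simplest membership inference tests is a loss-threshold attack: because overfit models tend to have lower loss on their training records, an attacker guesses "member" whenever a record's loss falls below a threshold. The sketch below scores that attack; the loss values are made-up numbers for illustration, not measurements from a real model.

```python
def loss_threshold_attack(member_losses, nonmember_losses):
    """Guess 'member' whenever a record's loss is at or below a threshold t, and return
    the best balanced accuracy over all thresholds. 0.5 means no better than chance.
    Choosing t on the same data makes this an optimistic estimate of attack strength."""
    best = 0.5
    for t in sorted(set(member_losses) | set(nonmember_losses)):
        tpr = sum(loss <= t for loss in member_losses) / len(member_losses)
        tnr = sum(loss > t for loss in nonmember_losses) / len(nonmember_losses)
        best = max(best, (tpr + tnr) / 2)
    return best

# Made-up per-record losses: an overfit model has much lower loss
# on records it was trained on than on records it never saw.
members = [0.01, 0.02, 0.05, 0.10, 0.30]
nonmembers = [0.20, 0.60, 0.90, 1.20, 1.50]
print(loss_threshold_attack(members, nonmembers))  # 0.9
```

A score near 0.5 suggests the model leaks little membership information; scores well above it are a signal to retrain with stronger protections.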

Model inversion attacks take a different route, attempting to reconstruct input data from the model's outputs. For example, attackers might try to reverse-engineer personal messages, financial records, or health data from AI-generated responses. This risk is particularly concerning for messaging platforms, where sensitive conversations could potentially be pieced together from automated summaries or smart reply suggestions.

To catch these risks, output audits are crucial. These audits involve scanning model responses for any traces of personally identifiable information (PII), confidential business data, or other sensitive details that should not appear. Libraries can automate parts of the broader assessment: Google's TensorFlow Privacy, for example, includes membership inference attack tests that score how vulnerable a model is, which makes regular reassessment easier. Microsoft's SmartNoise, by contrast, is mainly a toolkit for releasing differentially private statistics and synthetic data.
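A minimal output-audit sketch, assuming a handful of regex detectors and a hypothetical batch of model responses. It reports which outputs contain which kind of PII so they can be reviewed. The patterns are illustrative and will miss many cases; production scanners combine many detectors, including entity recognition for names.

```python
import re

# Illustrative patterns only; real scanners use many more detectors.
PII_PATTERNS = {
    "email": re.compile(r"\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "card_number": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
}

def audit_outputs(outputs):
    """Return (output_index, pii_type) pairs for every model output that matches a pattern."""
    findings = []
    for i, text in enumerate(outputs):
        for kind, pattern in PII_PATTERNS.items():
            if pattern.search(text):
                findings.append((i, kind))
    return findings

# Hypothetical smart-reply outputs collected during testing.
outputs = [
    "Sure, I'll send the report tomorrow.",
    "Reply to bob@example.com about the invoice.",
    "Her SSN is 123-45-6789.",
]
print(audit_outputs(outputs))  # [(1, 'email'), (2, 'us_ssn')]
```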

Once vulnerabilities are identified, immediate action is needed to prevent further issues.

Fixing Privacy Violations

The first step is to immediately halt the deployment of the affected model to stop any additional data exposure. In some cases, this might mean temporarily disabling AI-powered features while fixes are implemented.

Retraining the model with stronger privacy techniques is a key remedy. Differential privacy, for instance, can significantly reduce the success rate of inference attacks, from 80% to under 5%. During training, this usually means clipping each example's gradient and adding calibrated noise (an approach known as DP-SGD), which bounds how much any single record can influence the model and makes it much harder for attackers to tell whether a specific individual's data was used.
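The core aggregation step of DP-SGD can be sketched in plain Python. This simplified illustration assumes per-example gradients are already computed; the function names are hypothetical. Turning the noise level into a concrete ε requires a privacy accountant, such as those provided by TensorFlow Privacy or Opacus.

```python
import math
import random

def clip(grad, max_norm):
    """Scale a per-example gradient so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    factor = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * factor for g in grad]

def dp_sgd_gradient(per_example_grads, max_norm, noise_multiplier, rng):
    """DP-SGD aggregation: clip each example's gradient, sum, add Gaussian noise
    with std = noise_multiplier * max_norm, then average over the batch."""
    clipped = [clip(g, max_norm) for g in per_example_grads]
    dim = len(clipped[0])
    summed = [sum(g[k] for g in clipped) for k in range(dim)]
    noisy = [s + rng.gauss(0.0, noise_multiplier * max_norm) for s in summed]
    return [v / len(per_example_grads) for v in noisy]

# Two hypothetical per-example gradients; the first is large and gets clipped.
grads = [[3.0, 4.0], [0.3, 0.4]]
print(dp_sgd_gradient(grads, max_norm=1.0, noise_multiplier=1.1, rng=random.Random(0)))
```

Clipping caps each record's influence, and the noise scale is tied to that cap, which is what makes the privacy guarantee hold regardless of how unusual any one record is.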

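Federated learning is another powerful tool. It keeps raw data on user devices rather than centralizing it, which is particularly useful for platforms that handle sensitive communications, like Inbox Agents. On its own, however, it does not stop attacks against the trained model, so it is often paired with differential privacy.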

Finally, affected users should be notified promptly, adhering to legal requirements.

The importance of swift action is clear. A 2022 industry survey revealed that over 60% of organizations in regulated sectors have adopted privacy-preserving methods like federated learning or differential privacy to meet compliance needs. This highlights a growing understanding that addressing privacy violations reactively is far more costly than preventing them in the first place.

Summary of Privacy Violations and Solutions

Here’s a quick breakdown of common privacy issues and their fixes:

| Privacy Violation Type | Detection Method | Recommended Solution |
|---|---|---|
| Membership Inference Attack | Simulated attack testing, privacy tools | Apply differential privacy, retrain with noise injection |
| Model Inversion Attack | Simulated inversion attacks (attempting to reconstruct inputs from outputs) | Use federated learning, restrict model access and output detail |
| Data Leakage | Output audits, PII scanning tools | Strengthen anonymization, tighten access controls |
| Unauthorized Data Access | Access log analysis, anomaly detection | Update policies, enforce role-based permissions |
| Inadequate Anonymization | Data reviews, re-identification tests | Apply stronger anonymization, explore synthetic data |

The success of these solutions depends on proper implementation and consistent monitoring. Treating privacy response as a one-time fix often leads to recurring problems. A better approach is to integrate detection and remediation into every step of the AI development process. This way, privacy risks are continually evaluated and addressed as models evolve, ensuring a strong, privacy-first framework throughout the lifecycle of AI systems.
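For the re-identification tests listed under inadequate anonymization, one common starting point is a k-anonymity check: every combination of quasi-identifiers (attributes like ZIP prefix and age band that are not identifying alone but can be in combination) should be shared by at least k records. This sketch assumes hypothetical, already-generalized records; note that k-anonymity alone does not prevent every kind of disclosure.

```python
from collections import Counter

def group_sizes(records, quasi_identifiers):
    """Count how many records share each combination of quasi-identifier values."""
    return Counter(tuple(r[q] for q in quasi_identifiers) for r in records)

def k_anonymity(records, quasi_identifiers):
    """The dataset is k-anonymous for k equal to its smallest group size."""
    return min(group_sizes(records, quasi_identifiers).values())

def risky_groups(records, quasi_identifiers, k):
    """Quasi-identifier combinations shared by fewer than k records (re-identification risk)."""
    return [key for key, n in group_sizes(records, quasi_identifiers).items() if n < k]

# Hypothetical generalized records.
records = (
    [{"zip": "941**", "age_band": "30-39", "plan": "pro"}] * 3
    + [{"zip": "100**", "age_band": "40-49", "plan": "free"}] * 2
    + [{"zip": "606**", "age_band": "20-29", "plan": "pro"}]
)
qi = ["zip", "age_band"]
print(k_anonymity(records, qi))      # 1
print(risky_groups(records, qi, 2))  # [('606**', '20-29')]
```

A result of 1 means at least one person is unique on these attributes, which calls for further generalization, suppression, or synthetic data.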

Conclusion: Building Privacy-Conscious AI Models

Key Takeaways

Creating AI models that prioritize privacy isn't just about meeting regulations - it's about building systems that users can trust with their sensitive data. This trust is achieved through strategies like keeping raw data on devices, applying differential privacy for mathematical safeguards, and using encryption techniques during computation.

The methods discussed earlier - federated learning, differential privacy, homomorphic encryption, and secure multiparty computation (SMPC) - work together to create a strong framework for privacy-by-design. Real-world deployments show that these techniques are practical, although homomorphic encryption and SMPC remain costlier to scale than federated learning and differential privacy.

The best results come from combining multiple techniques instead of relying on just one. Regular audits and constant monitoring are critical because even the most carefully designed systems can face privacy challenges. Proactively identifying and addressing potential issues is key to maintaining user trust and meeting compliance standards.

These approaches do more than protect data - they establish long-term trust, which is invaluable for SaaS platforms.

The Role of Privacy in SaaS Platforms

For SaaS platforms, integrating privacy into every layer of AI functionality is non-negotiable. Platforms that handle sensitive user communications, for instance, see a direct link between privacy-conscious AI and user adoption. When users know their data is secure, they’re more likely to engage with AI-driven features.

Take Inbox Agents as an example: their privacy-first approach ensures messages are processed only for user-enabled features. This method tackles a major challenge for AI-powered platforms - offering personalized, intelligent features while maintaining strict data protection. By focusing solely on user-enabled processing and steering clear of unnecessary data exploitation, platforms can build the trust needed for long-term growth.

Continuous monitoring and regular audits reinforce user confidence by showing how technical safeguards translate into real protections. Beyond user trust, privacy-conscious AI also gives platforms an edge in heavily regulated industries. With increasingly stringent requirements like GDPR, CCPA, and the EU AI Act, embedding privacy from the start avoids costly redesigns and potential legal troubles.

Investing in privacy-preserving techniques does more than ensure compliance - it positions platforms to thrive in a market where users demand transparency. Platforms that demonstrate strong privacy measures, rather than just talking about them, will stand out as privacy awareness continues to grow across industries.

FAQs

How does federated learning help protect privacy while improving AI models?

Federated learning takes a fresh approach to privacy by letting AI models learn directly from decentralized data, eliminating the need to transfer it to a central server. Instead of pooling sensitive information in one place, the model is trained locally on individual user devices. Once trained, only the model updates - never the raw data - are sent to a central system.

This method keeps personal information secure while still allowing the model to grow smarter by tapping into diverse datasets. It’s especially useful in areas like healthcare and finance, where safeguarding sensitive data is critical.

What challenges come with using homomorphic encryption and secure multi-party computation (SMPC) in AI training, and how can they be addressed?

Homomorphic encryption and secure multi-party computation (SMPC) offer robust methods for ensuring privacy in AI training. However, they come with their own set of hurdles. Homomorphic encryption requires substantial computational resources to process encrypted data, which can significantly slow down training. On the other hand, SMPC involves coordinating multiple parties, which can lead to inefficiencies and potential communication bottlenecks.

To overcome these challenges, organizations can turn to optimized algorithms and specialized hardware accelerators tailored for privacy-preserving tasks. Another approach is to use hybrid models, blending techniques like encryption with differential privacy or federated learning. These strategies help reduce computational burdens while maintaining strong privacy safeguards. Striking the right balance between performance and security is key to making privacy-focused AI training both practical and effective.

How do techniques like differential privacy help ensure compliance with regulations such as GDPR and CCPA?

Privacy-preserving techniques such as differential privacy can help organizations meet the requirements of regulations like the General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA). Differential privacy adds carefully calibrated noise to data, query results, or the training process, so individual identities remain protected while meaningful insights can still be drawn from the data as a whole.

By protecting personal information, differential privacy supports core principles of GDPR and CCPA, such as data minimization and safeguarding user identities. This helps organizations stay compliant with regulatory standards and strengthens user trust. Its quantifiable guarantee, expressed as a privacy budget, can also be documented, which supports transparency and accountability - two pillars of privacy laws in the U.S. and globally.